Difference between revisions of "Information theory"

| (38 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

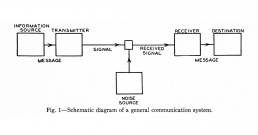

| − | + | [[Image:Shannon_Claude_E_1948_General_communication_system_diagram.jpg|thumb|258px|Diagram of a general communication system from Claude E. Shannon, ''A Mathematical Theory of Communication'', 1948.]] | |

| − | + | '''Information theory''' is a branch of applied mathematics, electrical engineering, and computer science which originated primarily in the work of [[Claude Shannon]] and his colleagues in the 1940s. It deals with concepts such as information, entropy, information transmission, data compression, coding, and related topics. Paired with simultaneous developments in cybernetics, and despite the criticism of many, it has been subject to wide-ranging interpretations and applications outside of mathematics and engineering. | |

| − | + | This page outlines a bibliographical genealogy of information theory in the United States, France, the Soviet Union, and Germany in the 1940s and 1950s, followed by a selected bibliography on its impact across the sciences. | |

| − | and | ||

| − | Shannon's theory | + | ==Bibliography== |

| − | + | {{refbegin}} | |

| − | + | [[Image:Shannon_Claude_E_1945_A_Mathematical_Theory_of_Cryptography.jpg|thumb|258px|Claude E. Shannon, ''A Mathematical Theory of Cryptography'', 1945. [http://archive.org/stream/ShannonMiscellaneousWritings#page/n181/mode/2up View online].]] | |

| − | + | [[Image:Shannon_Claude_E_1948_A_Mathematical_Theory_of_Communication_offprint.jpg|thumb|258px|Claude E. Shannon, ''A Mathematical Theory of Communication'', 1948. [[Media:Shannon_Claude_E_A_Mathematical_Theory_of_Communication_1957.pdf|Download]] (monograph).]] | |

| − | + | [[Image:Shannon_Claude_E_Weaver_Warren_The_Mathematical_Theory_of_Communication.jpg|thumb|258px|Claude E. Shannon, Warren Weaver, ''The Mathematical Theory of Communication'', 1949. [[Media:Shannon_Claude_E_Weaver_Warren_The_Mathematical_Theory_of_Communication_1963.pdf|Download]] (1963 edition).]] | |

| − | + | ===Shannon's information theory (1948)=== | |

| − | + | * Harry Nyquist, [[Media:Nyquist_Harry_1924_Certain_Factors_Affecting_Telegraph_Speed.pdf|"Certain Factors Affecting Telegraph Speed"]], ''Journal of the AIEE'' 43:2 (February 1924), pp 124-130; repr. in ''Bell System Technical Journal'', Vol. 3 (April 1924), pp 324-346. Presented at the Midwinter Convention of the AIEE, Philadelphia, February 1924. Shows that a certain bandwidth was necessary in order to send telegraph signals at a definite rate. Considers two fundamental factors for the maximum speed of transmission of 'intelligence' [not information] by telegraph: signal shaping and choice of codes. Used in Shannon 1948. | |

| − | + | * Harry Nyquist, [[Media:Nyquist_Harry_1928_Certain_Topics_in_Telegraph_Transmission_Theory.pdf|"Certain Topics in Telegraph Transmission Theory"]], ''Transactions of AIEE'', Vol. 47 (April 1928), pp 617-644; [http://web.archive.org/web/20060706192816/http://www.loe.ee.upatras.gr/Comes/Notes/Nyquist.pdf repr. in] ''Proceedings of the IEEE'' 90:2 (February 2002), pp 280-305. Presented at the Winter Convention of the AIEE in New York in February 1928. Argues for the steady-state system over the method of transients for determining the distortion of telegraph signals. In this and his 1924 paper, Nyquist determines that the number of independent pulses that could be put through a telegraph channel per unit time is limited to twice the bandwidth of the channel; this rule is essentially a dual of what is now known as the Nyquist–Shannon sampling theorem. Used in Shannon 1948. | |

| − | + | * Ralph V.L. Hartley, [[Media:Hartley_Ralph_VL_1928_Transmission_of_Information.pdf|"Transmission of Information"]], ''Bell System Technical Journal'' 7:3 (July 1928), pp 535-563. Presented at the International Congress of Telegraphy and Telephony, Lake Como, Italy, September 1927. Uses the word ''information'' as a measurable quantity, and opts for a logarithmic function as its measure, so that the information in a message is given by the logarithm of the number of possible messages: H = n log S, where ''S'' is the number of possible symbols, and ''n'' the number of symbols in a transmission. Used in Shannon 1948. | |

| + | * Claude E. Shannon, ''[http://archive.org/stream/ShannonMiscellaneousWritings#page/n181/mode/2up A Mathematical Theory of Cryptography]'', Memorandum MM 45-110-02, Bell Laboratories, 1 September 1945, 114 pages + 25 figures; repr. in Shannon, ''Miscellaneous Writings'', eds. N.J.A. Sloane and Aaron D. Wyner, AT&T Bell Laboratories, 1993. Classified. Redacted and published in 1949 (see below). Shannon's first lengthy treatise on the transmission of "information." [http://www.cs.bell-labs.com/who/dmr/pdfs/shannoncryptshrt.pdf] | ||

| + | * Claude E. Shannon, [[Media:Shannon_Claude_E_1948_A_Mathematical_Theory_of_Communication.pdf|"A Mathematical Theory of Communication"]], ''Bell System Technical Journal'' 27 (July, October 1948), pp 379-423, 623-656. [[Media:Shannon_Claude_E_A_Mathematical_Theory_of_Communication.pdf|Reprinted as]] Monograph B-1598, Bell Telephone System Technical Publications, 83 pp; repr. December 1957. | ||

| + | ** "Statisticheskaia teoriia peredachi elektricheskikh signalov", in ''Teoriya peredachi elektricheskikh signalov pri nalichii pomekh'', ed. Nikolai A. Zheleznov, Moscow: IIL, 1953. (in Russian, details below) | ||

| + | ** "Matematicheskaya teoriya svyazi", trans. S. Karpov, in ''[http://padabum.com/data/%D0%9A%D1%80%D0%B8%D0%BF%D1%82%D0%BE%D0%B3%D1%80%D0%B0%D1%84%D0%B8%D1%8F/%D0%A0%D0%B0%D0%B1%D0%BE%D1%82%D1%8B%20%D0%BF%D0%BE%20%D1%82%D0%B5%D0%BE%D1%80%D0%B8%D0%B8%20%D0%B8%D0%BD%D1%84%D0%BE%D1%80%D0%BC%D0%B0%D1%86%D0%B8%D0%B8%20%D0%B8%20%D0%BA%D0%B8%D0%B1%D0%B5%D1%80%D0%BD%D0%B5%D1%82%D0%B8%D0%BA%D0%B5%20(1963)%20-%20%D0%A8%D0%B5%D0%BD%D0%BD%D0%BE%D0%BD.pdf Raboty po teorii informatsii i kibernetike]'', Moscow: IIL, 1963, pp 243-332. (in Russian, details below) | ||

| + | * Robert M. Fano, ''The Transmission of Information'', Technical Reports No. 65 (17 March 1949) and No. 149 (6 February 1950), Research Laboratory of Electronics, MIT. A coding technique similar to Shannon's, only derived differently. Optimised in 1952 by his student, Huffman (see below). | ||

| + | * Warren Weaver, [[Media:Weaver_Warren_1949_The_Mathematics_of_Communication.pdf|"The Mathematics of Communication"]], ''Scientific American'' 181:1 (July 1949), pp 11-15; [[Media:Weaver_Warren_1949_1973_The_Mathematics_of_Communication.pdf|repr. in]] ''Basic Readings in Communication Theory'', ed. C. David Mortensen, Harper & Row, 1973, pp 27-38. [http://www.scientificamerican.com/article/the-mathematics-of-communication/] | ||

| + | * Claude E. Shannon, Warren Weaver, ''[[Media:Shannon_Claude_E_Weaver_Warren_The_Mathematical_Theory_of_Communication_1963.pdf|The Mathematical Theory of Communication]]'', Urbana: University of Illinois Press, 1949, 117 pp; 1963; 1969; 1971; 1972; 1975; 1998. Reviews: [http://www.jstor.org/stable/410457 Hockett] (1953, EN). Consists of two texts: Shannon's 1948 paper (pp 3-91), and Weaver's "Recent contributions to the mathematical theory of communication", an edited version of his July 1949 paper (pp 95-117). [http://archive.org/details/mathematicaltheo00shan] [http://raley.english.ucsb.edu/wp-content/Engl800/Shannon-Weaver.pdf] | ||

| + | ** ''コミュニケーションの数学的理論'', trans. Atsushi Hasegawa, 明治図書, 1969; 2001. {{jp}} | ||

| + | ** ''La teoria matematica delle comunicazioni'', trans. Paolo Cappelli, Milan: Etas Kompass, 1971. {{it}} | ||

| + | ** ''Mathematische Grundlagen der Informationstheorie'', Munich and Vienna: Oldenbourg, 1976. {{de}}. [http://www.dandelon.com/servlet/download/attachments/dandelon/ids/DE0043BD54364F846B4A4C1257A14003AB44C.pdf Contents]. | ||

| + | ** ''通信の数学的理論'', trans. Uematsu Tomohiko, 筑摩書房, 2009, 231 pp. {{jp}} | ||

| + | * Claude E. Shannon, [http://www3.alcatel-lucent.com/bstj/vol28-1949/articles/bstj28-4-656.pdf "Communication Theory of Secrecy Systems"], ''Bell System Technical Journal'' 28 (October 1949), pp 656-715; repr. as a monograph, New York: American Telephone and Telegraph Company, 1949. Unclassified revision of Shannon's memorandum ''A Mathematical Theory of Cryptography'', 1945, which itself remained classified until 1957. [http://archive.org/stream/bstj28-4-656#page/n0/mode/2up] | ||

| + | ** "Teoriya svyazi v sekretnykh sistemakh" [Теория связи в секретных системах], trans. С. Карпов, in ''[http://padabum.com/data/%D0%9A%D1%80%D0%B8%D0%BF%D1%82%D0%BE%D0%B3%D1%80%D0%B0%D1%84%D0%B8%D1%8F/%D0%A0%D0%B0%D0%B1%D0%BE%D1%82%D1%8B%20%D0%BF%D0%BE%20%D1%82%D0%B5%D0%BE%D1%80%D0%B8%D0%B8%20%D0%B8%D0%BD%D1%84%D0%BE%D1%80%D0%BC%D0%B0%D1%86%D0%B8%D0%B8%20%D0%B8%20%D0%BA%D0%B8%D0%B1%D0%B5%D1%80%D0%BD%D0%B5%D1%82%D0%B8%D0%BA%D0%B5%20(1963)%20-%20%D0%A8%D0%B5%D0%BD%D0%BD%D0%BE%D0%BD.pdf Raboty po teorii informatsii i kibernetike]'' [Работы по теории информации и кибернетике], Moscow: IIL (ИИЛ), 1963, pp 333-402. {{ru}} [http://gen.lib.rus.ec/book/index.php?md5=73C402108312926730611D5A64514BBB&open=0] | ||

| + | * David A. Huffman, [http://www.ias.ac.in/resonance/Volumes/11/02/0091-0099.pdf "A Method for the Construction of Minimum-Redundancy Codes"], ''Proceedings of the I.R.E.'' 40 (September 1952), pp 1098–1102. Fano's student; developed an algorithm for efficient encoding of the output of a source. Later became popular in compression tools. | ||

| + | * Claude E. Shannon, [http://archive.org/stream/ShannonMiscellaneousWritings#page/n617/mode/2up "Information Theory"], Seminar Notes, MIT, from 1956, in Shannon, ''Miscellaneous Writings'', eds. N.J.A. Sloane and Aaron D. Wyner, AT&T Bell Laboratories, 1993. | ||

| + | {{:Cybernetics}} | ||

| − | + | ===Popularisation of information theory in the United States (1950s)=== | |

| − | + | * Charles Eames, Ray Eames, ''[http://www.archive.org/details/communications_primer A Communications Primer]'', 16 mm, 1953, 21 min. An educational film aimed at students, sponsored by IBM and distributed by the Museum of Modern Art. | |

| − | of | + | {| align="right" |

| + | |- | ||

| + | |{{#widget:Html5media|url=http://archive.org/download/communications_primer/communications_primer_512kb.mp4|width=265|height=220}} | ||

| + | |} | ||

| + | * Francis Bello, "The Information Theory", ''Fortune'', Vol. 48 (December 1953), pp 136-158. | ||

| + | * ''The Search'', 1954. Documentary film featuring Shannon, Forrester and Wiener, produced by NBC. | ||

| + | * Stanford Goldman, ''Information Theory'', Prentice-Hall, 1953, 385 pp; New York: Dover, 1968; 2005. | ||

| + | ** S. Goldman (С. Голдман), ''Teoriya informatsii'' [Теория информации], Moscow, 1957. {{ru}} | ||

| + | * Léon Brillouin, ''Science and Information Theory'', New York: Academic Press, 1956. A best-selling rewriting of physics in terms of information theory. | ||

| + | * ''Information and Control'' journal, founded 1958. Founding editors: Léon Brillouin, Colin Cherry, Peter Elias. | ||

| − | + | [[Image:Shannon_Claude_E_1956_The_Bandwagon.jpg|thumb|258px|Claude E. Shannon, "The Bandwagon", 1956. [[Media:Shannon_Claude_E_1956_The_Bandwagon.pdf|Download]].]] | |

| − | + | ===Debate in ''Transactions'' (1955-56)=== | |

| − | + | * L.A. De Rosa, "In Which Fields Do We Graze?", ''I.R.E. Transactions on Information Theory'' 1 (December 1955). Editorial by the chairman of the Professional Group on Information Theory: "The expansion of the applications of Information Theory to fields other than radio and wired communications has been so rapid that oftentimes the bounds within which the Professional Group interests lie are questioned. Should an attempt be made to extend our interests to such fields as management, biology, psychology, and linguistic theory, or should the concentration be strictly in the direction of communication by radio or wire?" | |

| − | + | * Claude E. Shannon, [[Media:Shannon_Claude_E_1956_The_Bandwagon.pdf|"The Bandwagon"]], ''I.R.E. Transactions on Information Theory'' 2 (1956), p 3. Shannon's call for keeping information theory "an engineering problem": "Workers in other fields should realize that the basic results of the subject are aimed in a very specific direction, a direction that is not necessarily relevant to such fields as psychology, economics, and other social sciences.. [T]he establishing of such applications is not a trivial matter of translating words to a new domain, but rather the slow tedious process of hypothesis and verification. If, for example, the human being acts in some situations like an ideal decoder, this is an experimental and not a mathematical fact, and as such must be tested under a wide variety of experimental situations." | |

| − | + | ** [http://www.vbvvbv.narod.ru/bandwagon.htm "Bandvagon"] [Бандвагон], trans. S. Karpov, in ''[http://padabum.com/data/%D0%9A%D1%80%D0%B8%D0%BF%D1%82%D0%BE%D0%B3%D1%80%D0%B0%D1%84%D0%B8%D1%8F/%D0%A0%D0%B0%D0%B1%D0%BE%D1%82%D1%8B%20%D0%BF%D0%BE%20%D1%82%D0%B5%D0%BE%D1%80%D0%B8%D0%B8%20%D0%B8%D0%BD%D1%84%D0%BE%D1%80%D0%BC%D0%B0%D1%86%D0%B8%D0%B8%20%D0%B8%20%D0%BA%D0%B8%D0%B1%D0%B5%D1%80%D0%BD%D0%B5%D1%82%D0%B8%D0%BA%D0%B5%20(1963)%20-%20%D0%A8%D0%B5%D0%BD%D0%BD%D0%BE%D0%BD.pdf Raboty po teorii informatsii i kibernetike]'' [Работы по теории информации и кибернетике], Moscow: IIL (ИИЛ), 1963, pp 667-668. {{ru}} | |

| − | + | * Norbert Wiener, "What Is Information Theory?", ''I.R.E. Transactions on Information Theory'' 3 (June 1956), p 48. A rejection of Shannon's narrowing focus and an insistence that "information" remain part of a larger indissociable ensemble including all the sciences: "I am pleading in this editorial that Information Theory...return to the point of view from which it originated: that of the general statistical concept of communication.. What I am urging is a return to the concepts of this theory in its entirety rather than the exaltation of one particular concept of this group, the concept of the measure of information into the single dominant idea of all." | |

| − | == | + | ===Historical analysis and review of the impact of information theory=== |

| − | + | ====In mathematics, engineering and computing==== | |

| − | + | ; Written by engineers and mathematicians | |

| − | + | * Colin Cherry, "A History of the Theory of Information", ''IRE Transactions on Information Theory'' 1:1 (1953), pp 22-43. | |

| − | + | * Louis H. M. Stumpers, "A Bibliography on Information Theory (Communication Theory - Cybernetics)", ''I.R.E. Transactions on Information Theory'' 1 (1955), pp 31-47. | |

| − | + | * John Pierce, "The Early Days of Information Theory", ''IEEE Transactions on Information Theory'' 19:1 (January 1973), pp 3-8. [http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=1054955] | |

| − | + | * William Aspray, [[Media:Aspray_William_1985_Scientific_Conceptualization_of_Information_A_Survey.pdf|"Scientific Conceptualization of Information: A Survey"]], ''Annals of the History of Computing'' 7:2 (April 1985), pp 117-140. | |

| − | + | * Karl L. Wildes, Nilo A. Lindgren, "The Research Laboratory of Electronics", in ''[http://gen.lib.rus.ec/get?md5=1075a08841e2ddfc243c53079110e2d5&open=0 A Century of Electrical Engineering and Computer Science at MIT, 1882-1982]'', MIT Press, 1985, pp 242-278. | |

| + | * E.M. Rogers, T.W. Valente, [http://books.google.com/books?id=cqF9zfmDjL8C&pg=PA39 "A History of Information Theory in Communication"], in ''Between Communication and Information, Vol. 4: Information and Behavior'', eds. J.R. Schement and B.D. Ruben, New Brunswick, NJ: Transaction, 1993, pp 35-56. | ||

| + | * Sergio Verdú, [http://www.dks.rub.de/mam/pdf/downloads/50yearsshannon.pdf "Fifty Years of Shannon Theory"], ''IEEE Transactions on Information Theory'' 44:6 (1998), pp 2057-2078. | ||

| + | * Ronald R. Kline, [http://www.asis.org/History/01-kline.pdf "What Is Information Theory a Theory Of? Boundary Work Among Scientists in the United States and Britain During the Cold War"], in ''The History and Heritage of Scientific and Technical Information Systems: Proceedings of the 2002 Conference, Chemical Heritage Foundation'', eds. W. Boyd Rayward and Mary Ellen Bowden, Medford, NJ: Information Today, 2004, pp 15-28. | ||

| + | * Lav Varshney, [http://www.ieee.org/portal/cms_docs_iportals/iportals/aboutus/history_center/conferences/che2004/Varshney.pdf "Engineering Theory and Mathematics in the Early Development of Information Theory"], ''IEEE Conf. History of Electronics'', 2004, pp 1-6. | ||

| + | * Ya.I. Fet (ed.), ''[http://www.computer-museum.ru/books/cibernetics_hist.pdf Iz istorii kibernetiki]'', Novosibirsk: Geo, 2006, 339 pp. {{ru}} | ||

| − | + | [[Image:Hayles_N_Katherine_How_We_Became_Posthuman_Virtual_Bodies_in_Cybernetics_Literature_and_Informatics.jpg|thumb|258px|N. Katherine Hayles, ''How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics'', 1999. [[Media:Hayles_N_Katherine_How_We_Became_Posthuman_Virtual_Bodies_in_Cybernetics_Literature_and_Informatics.pdf|Download]].]] | |

| − | + | ; Written by historians and theorists | |

| − | + | * Peter Galison, [http://jerome-segal.de/Galison94.pdf "The Ontology of the Enemy: Norbert Wiener and the Cybernetic Vision"], ''Critical Inquiry'' 21:1 (Autumn 1994), pp 228-266. Demonstrates the significance of the war effort of devising a servomechanical shooting device, in which Wiener participated, as a defining moment in the elaboration of the cybernetic model. | |

| − | + | * N. Katherine Hayles, ''[http://monoskop.org/log/?p=2718 How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics]'', University of Chicago Press, 1999, 350 pp. | |

| − | + | * Jean-Pierre Dupuy, ''The Mechanization of the Mind: On the Origins of Cognitive Science'', trans. M. B. Debevoise, Princeton University Press, 2000, 240 pp. A survey of the Macy conferences. [http://web.archive.org/web/20070205052307/http://www.cogs.susx.ac.uk/users/ezequiel/dupuy.pdf Review], [http://www.asc-cybernetics.org/foundations/historyrefs.htm]. | |

| − | + | * David A. Mindell, ''Between Human and Machine: Feedback, Control, and Computing before Cybernetics'', Johns Hopkins University Press, 2002, 439 pp. [http://www.asc-cybernetics.org/foundations/historyrefs.htm] | |

| − | + | * Slava Gerovitch, [http://scribd.com/doc/201138190/ "'Russian Scandals': Soviet Readings of American Cybernetics in the Early Years of the Cold War"], ''The Russian Review'' 60 (October 2001), pp 545-568. | |

| − | + | * David Mindell, Jérôme Segal, Slava Gerovitch, [http://www.infoamerica.org/documentos_word/shannon-wiener.htm "From Communications Engineering to Communications Science: Cybernetics and Information Theory in the United States, France, and the Soviet Union"], in ''Science and Ideology: A Comparative History'', ed. Mark Walker, London and New York: Routledge, 2002, pp 66-96. [http://jerome-segal.de/Publis/science_and_ideology.rtf] | |

| − | + | * Jérôme Segal, ''Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle'', Paris: Syllepse, 2003, 906 pp; 2011. {{fr}} [http://www.materiologiques.com/IMG/pdf/0_1-Sommaire.pdf Contents], [http://www.syllepse.net/index.phtml?iprod=210&ForcerVueProduit=1&FromForcerV], [http://juliebouchard.online.fr/articles-pdf/2005b-bouchard-information.pdf Review], [http://www.persee.fr/web/revues/home/prescript/article/hel_0750-8069_2007_num_29_1_2918_t10_0171_0000_1 Review]. | |

| − | + | * Slava Gerovitch, ''[http://monoskop.org/log/?p=257 From Newspeak to Cyberspeak: A History of Soviet Cybernetics]'', MIT Press, 2004, 383 pp. | |

| − | the | + | * Yongdong Peng, "The Early Diffusion of Cybernetics in China (1929-1960)", ''Studies in the History of the Natural Sciences'', vol. 23, 2004, pp. 299-318. {{zh}} [http://en.cnki.com.cn/Article_en/CJFDTOTAL-ZRKY200404002.htm] [http://english.ihns.cas.cn/sp/pb/shns/201011/t20101109_61105.html] |

| − | + | * Bernard Dionysius Geoghegan, [http://bernardg.com/sites/default/files/pdf/GeogheganHistoriographicConception.pdf "The Historiographic Conception of Information: A Critical Survey"], ''IEEE Annals of the History of Computing'' 30:1 (2008), pp 66-81. | |

| + | * Mathieu Triclot, ''Le moment cybernétique: la constitution de la notion d’information'', Seyssel: Champ Vallon, 2008. {{fr}} | ||

| + | * Axel Roch, ''Claude E. Shannon. Spielzeug, Leben und die geheime Geschichte seiner Theorie der Information'', Berlin: gegenstalt, 2009, 256 pp; 2nd ed., 2010. {{de}} [http://moon.zkm.de/hp/pdf/shannon_preview.pdf Excerpt], [http://www.perlentaucher.de/buch/axel-roch/claude-e-shannon.html]. | ||

| + | * Andrew Pickering, ''[http://monoskop.org/log/?p=1531 The Cybernetic Brain: Sketches of Another Future]'', University of Chicago Press, 2010, 526 pp. Renders a history of British cybernetics with only brief excursions into information theory. | ||

| + | * Pierre-Éric Mounier-Kuhn, ''L’Informatique en France, de la seconde guerre mondiale au Plan Calcul. L’émergence d’une science'', Paris: PUPS, 2010, 720 pp. {{fr}} [http://pups.paris-sorbonne.fr/pages/aff_livre.php?Id=838] | ||

| + | * James Gleick, ''[http://monoskop.org/log/?p=1857 The Information: A History, A Theory, A Flood]'', Knopf, 2011, 496 pp. | ||

| + | * Ronan Le Roux, ''Une histoire de la cybernétique en France, 1948-1970'', Paris: Classiques Garnier, 2013. {{fr}} | ||

| − | + | [[Image:Vocal_apparatus_and_Voder_ATT_pamphlet.jpg|thumb|258px|Bernard Dionysius Geoghegan, ''The Cybernetic Apparatus: Media, Liberalism, and the Reform of the Human Sciences'', 2012, [https://monoskop.org/log/?p=9200 Log].]] | |

| − | + | ====In the social sciences and humanities==== | |

| − | + | * N. Katherine Hayles, [http://books.google.com/books?id=1hbSLwGRdt4C&pg=PA119 "Information or Noise? Economy of Explanation in Barthes’s ''S/Z'' and Shannon’s Information Theory"], in ''One Culture: Essays in Science and Literature'', ed. George Levine, Madison: University of Wisconsin Press, 1987, pp 119-142. [http://uwpress.wisc.edu/books/1445.htm] | |

| + | * Steve J. Heims, ''Constructing a Social Science for Postwar America: The Cybernetics Group (1946–1953)'', MIT Press, 1993. A survey of the Macy conferences and dissemination of information theory outside the natural sciences. [http://web.mit.edu/esd.83/www/notebook/HeimsReview.pdf Review], [http://www.asc-cybernetics.org/foundations/historyrefs.htm]. | ||

| + | * Jérôme Segal, "Les sciences humaines et la notion scientifique d'information", in ''Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle'', Paris: Syllepse, 2003; 2011, pp 487-534. {{fr}} [http://www.syllepse.net/index.phtml?iprod=210&ForcerVueProduit=1&FromForcerV] | ||

| + | * Céline Lafontaine, ''L’Empire cybernétique: des machines à penser à la pensée machine'' [The Cybernetic Empire: From Machines for Thinking to the Thinking Machine], Paris: Seuil, 2004. {{fr}}. [http://www.monde-diplomatique.fr/2004/07/RIEMENS/11459 Review]. | ||

| + | * Céline Lafontaine, [http://jerome-segal.de/Lafontaine07.pdf "The Cybernetic Matrix of 'French Theory'"], ''Theory, Culture & Society'' 24:5 (2007), pp 27-46. On the influence of cybernetics on the development of French structuralism, post-structuralism and postmodern philosophy after WWII. | ||

| + | * Michael Hagner, Erich Hörl (eds.), ''Die Transformationen des Humanen'', Suhrkamp, 2008, 450 pp. {{de}}. [http://www.suhrkamp.de/download/Blickinsbuch/9783518294482.pdf Contents and Introduction], [http://www.suhrkamp.de/buecher/die_transformation_des_humanen-_29448.html]. | ||

| + | * Jürgen Van de Walle, "Roman Jakobson, Cybernetics and Information Theory: A Critical Assessment", ''Folia Linguistica Historica'' 29 (December 2008), pp 87-123. | ||

| + | * Ronan Le Roux, [http://www.cairn.info/revue-l-homme-2009-1-page-165.htm "Lévi-Strauss, une réception paradoxale de la cybernétique"], ''L’Homme'' 189 (January-March 2009), pp 165-190. {{fr}} | ||

| + | * Bruce Clarke, [http://pages.uoregon.edu/koopman/courses_readings/phil123-net/intro/mitchell_ed_crit-terms_3chs.pdf "Information"], in ''Critical Terms for Media Studies'', eds. W.J.T. Mitchell and Mark B.N. Hansen, Chicago: University of Chicago Press, 2010, pp 157-171. | ||

| + | * Lydia H. Liu, "The Cybernetic Unconscious: Rethinking Lacan, Poe, and French Theory", ''Critical Inquiry'' 36:2 (Winter 2010), pp 288-320. [http://www.jstor.org/stable/10.1086/648527] | ||

| + | * Bernard Dionysius Geoghegan, [http://criticalinquiry.uchicago.edu/uploads/pdf/Geoghegan,_Theory.pdf "From Information Theory to French Theory: Jakobson, Lévi-Strauss, and the Cybernetic Apparatus"], ''Critical Inquiry'' 38 (Autumn 2011), pp 96-126. | ||

| + | * Bernard Dionysius Geoghegan, ''[http://monoskop.org/log/?p=9200 The Cybernetic Apparatus: Media, Liberalism, and the Reform of the Human Sciences]'', Northwestern University and Bauhaus-Universität Weimar, 2012, 262 pp. Ph.D. Dissertation. | ||

| + | * Ronan Le Roux, [http://htl.linguist.univ-paris-diderot.fr/num3/leRoux.pdf "Lévi-Strauss, Lacan et les mathématiciens"], ''Les dossiers de HEL'' 3, Paris: SHESL, 2013. {{fr}} | ||

| − | : | + | ====In other fields==== |

| + | * Donna Haraway, "The High Cost of Information in Post World War II Evolutionary Biology: Ergonomics, Semiotics, and the Sociobiology of Communications Systems", ''Philosophical Forum'' 13:2-3 (Winter/Spring 1981-82), pp 244-278. | ||

| + | * Donna Haraway, "Signs of Dominance: From a Physiology to a Cybernetics of Primate Society, C.R. Carpenter, 1930-1970", in ''Studies in History of Biology, Vol. 6'', eds. William Coleman and Camille Limoges, Johns Hopkins University Press, 1982, pp 129-219. | ||

| + | * Philip Mirowski, "What Were von Neumann and Morgenstern Trying to Accomplish?", in ''Toward a History of Game Theory'', ed. E.R. Weintraub, Duke University Press, 1992, pp. 113-147. Information theory in economics. | ||

| + | * Evelyn Fox Keller, ''Refiguring Life: Metaphors of Twentieth-Century Biology'', Columbia University Press, 1995, pp 81-118. Information theory in embryology. | ||

| + | * Lily E. Kay, "Cybernetics, Information, Life: The Emergence of Scriptural Representations of Heredity", ''Configurations'' 5:1 (Winter 1997), pp 23-91. Information theory in genetics. [http://muse.jhu.edu/journals/configurations/v005/5.1kay.html] | ||

| + | * Philip Mirowski, "Cyborg Agonistes: Economics Meets Operations Research in Mid-Century", ''Social Studies of Science'' 29:5 (1999), pp. 685-718. Information theory in economics. | ||

| + | * Lily E. Kay, [http://cogsci.ucd.ie/Connectionism/Articles/mccullochKay.pdf "From Logical Neurons to Poetic Embodiments of Mind: Warren S. McCulloch’s Project in Neuroscience"], ''Science in Context'' 14:15 (2001), pp 591-614. Information theory in neuroscience. | ||

| + | * Jennifer S. Light, ''From Warfare to Welfare: Defense Intellectuals and Urban Problems in Cold War America'', Johns Hopkins University Press, 2003. Information theory in urban planning. | ||

| + | * Jérôme Segal, [http://jerome-segal.de/Publis/HPLS.rtf "The Use of Information Theory in Biology: a Historical Perspective"], ''History and Philosophy of the Life Sciences'' 25:2 (2003), pp 275-281. [http://www.jstor.org/stable/23332432] | ||

| + | * Jérôme Segal, "L'information et le vivant: aléas de la métaphore informationnelle", in ''Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle'', Paris: Syllepse, 2003, pp 487-534; 2011. {{fr}}. Information theory in genetics and biology. [http://www.syllepse.net/index.phtml?iprod=210&ForcerVueProduit=1&FromForcerV] | ||

| + | {{refend}} | ||

| − | + | ===Indirectly related prior work=== | |

| + | * [[Jacques Lafitte]], ''Réflexions sur la science des machines'' [Reflections on the Science of Machines], 1911-32. | ||

| + | * [[Marian Smoluchowski]], "Experimentell nachweisbare, der üblichen Thermodynamik widersprechende Molekularphänomene", ''Phys. Zeitschr.'' 13, 1912. Connecting the problem of Maxwell's Demon with that of Brownian motion, Smoluchowski wrote that in order to violate the second principle of thermodynamics, the Demon had to be "taught" [unterrichtet] regarding the speed of molecules. Wiener mentions him in passing in his ''Cybernetics'' (1948) [http://books.google.com/books?id=NnM-uISyywAC&pg=PA70]. | ||

| + | * [[Hermann Schmidt]], general regulatory theory [Allgemeine Regelungskunde], 1941-54. | ||

| − | + | ==See also== | |

| + | * [[Cybernetics]] | ||

| + | * [[Claude Shannon]] | ||

| − | + | ==Links== | |

| − | + | * [http://www.ieeeghn.org/wiki/index.php/Category:Information_theory Information theory in IEEE Global History Network] | |

| − | + | * [http://plato.stanford.edu/entries/information/ Information in Stanford Encyclopedia of Philosophy] | |

| − | of | + | * [http://en.wikipedia.org/wiki/A_Mathematical_Theory_of_Communication A Mathematical Theory of Communication at Wikipedia] |

| + | * [http://en.wikipedia.org/wiki/History_of_information_theory History of information theory at Wikipedia] | ||

| + | * [http://en.wikipedia.org/wiki/Information_theory Information theory at Wikipedia] | ||

| + | * [http://en.wikipedia.org/wiki/Macy_Conferences Macy Conferences at Wikipedia] | ||

| − | + | {{Humanities}} | |

| − | + | {{featured_article}} | |

Revision as of 01:10, 14 January 2019

Information theory is a branch of applied mathematics, electrical engineering, and computer science which originated primarily in the work of Claude Shannon and his colleagues in the 1940s. It deals with concepts such as information, entropy, information transmission, data compression, coding, and related topics. Paired with simultaneous developments in cybernetics, and despite the criticism of many, it has been subject to wide-ranging interpretations and applications outside of mathematics and engineering.

This page outlines a bibliographical genealogy of information theory in the United States, France, the Soviet Union, and Germany in the 1940s and 1950s, followed by a selected bibliography on its impact across the sciences.

Contents

- 1 Bibliography

- 1.1 Shannon's information theory (1948)

- 1.2 Wiener's cybernetics (1948)

- 1.3 Macy Conferences on Cybernetics (1946-1953)

- 1.4 Information theory and cybernetics in France (1950s)

- 1.5 Information theory and cybernetics in the Soviet Union (1950s)

- 1.6 Information theory and cybernetics in Germany (1950s)

- 1.7 Popularisation of information theory in the United States (1950s)

- 1.8 Debate in Transactions (1955-56)

- 1.9 Historical analysis and review of the impact of information theory

- 1.10 Indirectly related prior work

- 2 See also

- 3 Links

Bibliography

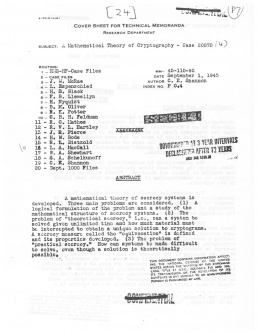

Claude E. Shannon, A Mathematical Theory of Cryptography, 1945. View online.

Claude E. Shannon, A Mathematical Theory of Communication, 1948. Download (monograph).

Claude E. Shannon, Warren Weaver, The Mathematical Theory of Communication, 1949. Download (1963 edition).

Shannon's information theory (1948)

- Harry Nyquist, "Certain Factors Affecting Telegraph Speed", Journal of the AIEE 43:2 (February 1924), pp 124-130; repr. in Bell System Technical Journal, Vol. 3 (April 1924), pp 324-346. Presented at the Midwinter Convention of the AIEE, Philadelphia, February 1924. Shows that a certain bandwidth was necessary in order to send telegraph signals at a definite rate. Considers two fundamental factors for the maximum speed of transmission of 'intelligence' [not information] by telegraph: signal shaping and choice of codes. Used in Shannon 1948.

- Harry Nyquist, "Certain Topics in Telegraph Transmission Theory", Transactions of AIEE, Vol. 47 (April 1928), pp 617-644; repr. in Proceedings of the IEEE 90:2 (February 2002), pp 280-305. Presented at the Winter Convention of the AIEE in New York in February 1928. Argues for the steady-state system over the method of transients for determining the distortion of telegraph signals. In this and his 1924 paper, Nyquist determines that the number of independent pulses that could be put through a telegraph channel per unit time is limited to twice the bandwidth of the channel; this rule is essentially a dual of what is now known as the Nyquist–Shannon sampling theorem. Used in Shannon 1948.

- Ralph V.L. Hartley, "Transmission of Information", Bell System Technical Journal 7:3 (July 1928), pp 535-563. Presented at the International Congress of Telegraphy and Telephony, Lake Como, Italy, September 1927. Treats information as a measurable quantity and opts for a logarithmic function as its measure: the information in a message is given by the logarithm of the number of possible messages, H = n log S, where S is the number of possible symbols and n the number of symbols in a transmission. Used in Shannon 1948.

- Claude E. Shannon, A Mathematical Theory of Cryptography, Memorandum MM 45-110-02, Bell Laboratories, 1 September 1945, 114 pages + 25 figures; repr. in Shannon, Miscellaneous Writings, eds. N.J.A. Sloane and Aaron D. Wyner, AT&T Bell Laboratories, 1993. Classified. Redacted and published in 1949 (see below). Shannon's first lengthy treatise on the transmission of "information." [1]

- Claude E. Shannon, "A Mathematical Theory of Communication", Bell System Technical Journal 27 (July, October 1948), pp 379-423, 623-656. Reprinted as Monograph B-1598, Bell Telephone System Technical Publications, 83 pp; repr. December 1957.

- "Statisticheskaia teoriia peredachi elektricheskikh signalov", in Teoriya peredachi elektricheskikh signalov pri nalichii pomekh, ed. Nikolai A. Zheleznov, Moscow: IIL, 1953. (in Russian, details below)

- "Matematicheskaya teoriya svyazi", trans. S. Karpov, in Raboty po teorii informatsii i kibernetike, Moscow: IIL, 1963, pp 243-332. (in Russian, details below)

- Robert M. Fano, The Transmission of Information, Technical Reports No. 65 (17 March 1949) and No. 149 (6 February 1950), Research Laboratory of Electronics, MIT. A coding technique similar to Shannon's, only derived differently. Optimised in 1952 by his student, Huffman (see below).

- Warren Weaver, "The Mathematics of Communication", Scientific American 181:1 (July 1949), pp 11-15; repr. in Basic Readings in Communication Theory, ed. C. David Mortensen, Harper & Row, 1973, pp 27-38. [2]

- Claude E. Shannon, Warren Weaver, The Mathematical Theory of Communication, Urbana: University of Illinois Press, 1949, 117 pp; 1963; 1969; 1971; 1972; 1975; 1998. Reviews: Hockett (1953, EN). Consists of two texts: Shannon's 1948 paper (pp 3-91), and Weaver's "Recent contributions to the mathematical theory of communication", an edited version of his July 1949 paper (pp 95-117). [3] [4]

- コミュニケーションの数学的理論, trans. Atsushi Hasegawa, 明治図書, 1969; 2001. (Japanese)

- La teoria matematica delle comunicazioni, trans. Paolo Cappelli, Milan: Etas Kompass, 1971. (Italian)

- Mathematische Grundlagen der Informationstheorie, Munich and Vienna: Oldenbourg, 1976. (German). Contents.

- 通信の数学的理論, trans. Uematsu Tomohiko, 筑摩書房, 2009, 231 pp. (Japanese)

- Claude E. Shannon, "Communication Theory of Secrecy Systems", Bell System Technical Journal 28 (October 1949), pp 656-715; repr. as a monograph, New York: American Telephone and Telegraph Company, 1949. Unclassified revision of Shannon's memorandum A Mathematical Theory of Cryptography, 1945, which was still classified (until 1957). [5]

- "Teoriya svyazi v sekretnykh sistemakh" [Теория связи в секретных системах], trans. С. Карпов, in Raboty po teorii informatsii i kibernetike [Работы по теории информации и кибернетике], Moscow: IIL (ИИЛ), 1963, pp 333-402. (Russian) [6]

- David A. Huffman, "A Method for the Construction of Minimum-Redundancy Codes", Proceedings of the I.R.E. 40 (September 1952), pp 1098–1102. Fano's student; developed an algorithm for efficient encoding of the output of a source. Later became popular in compression tools.

- Claude E. Shannon, "Information Theory", Seminar Notes, MIT, from 1956, in Shannon, Miscellaneous Writings, eds. N.J.A. Sloane and Aaron D. Wyner, AT&T Bell Laboratories, 1993.
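Two of the annotations above describe quantities that are easy to try out: Hartley's measure H = n log S, and Huffman's procedure for building a minimum-redundancy code. The following is a minimal illustrative sketch in Python, not taken from any of the listed sources. The function names, the choice of base-2 logarithms (bits), and the sample symbol weights are assumptions made for the example.

```python
import heapq
import math
from itertools import count


def hartley_information(n, s):
    """Hartley's measure H = n log S, here with log base 2 (an assumption),
    for a transmission of n symbols drawn from S possible symbols."""
    return n * math.log2(s)


def huffman_code_lengths(weights):
    """Return the code length (in bits) that Huffman's procedure assigns
    to each symbol, given a mapping of symbol -> weight (frequency)."""
    if not weights:
        return {}
    if len(weights) == 1:
        # A lone symbol still needs one bit to be transmitted.
        return {sym: 1 for sym in weights}

    tie = count()  # unique tie-breaker so symbol lists are never compared
    heap = [(w, next(tie), [sym]) for sym, w in weights.items()]
    heapq.heapify(heap)
    lengths = {sym: 0 for sym in weights}

    # Repeatedly merge the two lightest groups; every symbol in a merged
    # group moves one level deeper in the code tree.
    while len(heap) > 1:
        w1, _, syms1 = heapq.heappop(heap)
        w2, _, syms2 = heapq.heappop(heap)
        merged = syms1 + syms2
        for sym in merged:
            lengths[sym] += 1
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return lengths


if __name__ == "__main__":
    # Three binary symbols carry 3 bits under Hartley's measure.
    print(hartley_information(3, 2))
    # Hypothetical source: frequent symbols receive shorter codewords.
    print(huffman_code_lengths({"a": 5, "b": 2, "c": 1, "d": 1}))
```

Hartley's measure treats all S symbols as equally likely, whereas the Huffman lengths depend on the symbol weights; the sketch returns only codeword lengths, not the codewords themselves.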

Wiener's cybernetics (1948)

Norbert Wiener, Cybernetics: or Control and Communication in the Animal and the Machine, 1948. DJVU (Second edition, 1965).

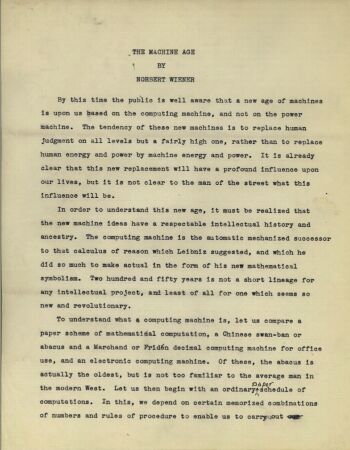

Norbert Wiener, "The Machine Age", [1949]. PDF (v3).

Norbert Wiener, The Human Use of Human Beings: Cybernetics and Society, 1950. PDF (1989 edition).

- Norbert Wiener, The Extrapolation, Interpolation, and Smoothing of Stationary Time Series, NDRC Report, MIT, February 1942. Classified (ordered by Warren Weaver, then the head of Section D-2), printed in 300 copies. Nicknamed "Yellow Peril". Published in 1949 (see below). Shannon 1948 mentions it as containing "the first clear-cut formulation of communication theory as a statistical problem, the study of operations on time series. This work, although chiefly concerned with the linear prediction and filtering problem, is an important collateral reference in connection with the present paper" (pp 626-7). [7], Commentary.

- Norbert Wiener, Cybernetics: or Control and Communication in the Animal and the Machine, Paris: Hermann & Cie, 1948; Cambridge, MA: Technology Press (MIT), 1948; New York: John Wiley & Sons, 1948, 194 pp; 2nd ed., MIT Press, and Wiley, 1961, 212 pp, PDF, ARG; repr., forew. Doug Hill and Sanjoy K. Mitter, MIT Press, 2019, xlvii+303 pp, EPUB. Reviews: Dubarle (1948, FR), Littauer (1949), MacColl (1950). In the spring of 1947, Wiener was invited to a congress on harmonic analysis, held in Nancy, France and organized by the bourbakist mathematician, Szolem Mandelbrojt. During this stay in France Wiener received the offer to write a manuscript on the unifying character of this part of applied mathematics, which is found in the study of Brownian motion and in telecommunication engineering. The following summer, back in the United States, Wiener decided to introduce the neologism ‘cybernetics’ into his scientific theory. According to Pierre De Latil, MIT Press tried their best to prevent the publication of the book in France, since Wiener, then professor at MIT, was bound to them by contract. As a representative of Hermann Editions, M. Freymann managed to find a compromise and the French publisher won the rights to the book. Having lived together in Mexico, Freymann and Wiener were friends and it is Freymann who is supposed to have suggested that Wiener write this book. Benoît Mandelbrot and Walter Pitts proofread the manuscript. [8]

- N. Viner (Н. Винер), Kibernetika, ili upravlenie i svyaz v zhivotnom i mashine [Кибернетика, или Управление и связь в животном и машине], trans. G.N. Povarov, Moscow: Sovetskoe radio [Советское радио], 1958, 216 pp; new ed., 1963; 2nd ed., 1968. (Russian)

- Kybernetik. Regelung und Nachrichtenübertragung in Lebewesen und Maschine, rororo, 1968; Econ, 1992. (German)

- La cybernétique. Information et régulation dans le vivant et la machine, trans. Ronan Le Roux, Robert Vallée and Nicole Vallée-Lévi, Paris: Seuil, 2014, 376 pp. [9] (French)

- more translations

- Norbert Wiener, "Cybernetics", Scientific American 179:5, Nov 1948, pp 14-19. Adapted from his 1948 book. [10]

- Norbert Wiener, The Extrapolation, Interpolation, and Smoothing of Stationary Time Series with Engineering Applications, Cambridge, MA: Technology Press (MIT), 1949; New York: John Wiley & Sons, 1949; London: Chapman & Hall, 1949; 2nd ed., MIT Press, 1966. Earlier printed as a classified NDRC "yellow peril" Report, MIT, 1942. Uses Gauss's method of shaping the characteristic of a detector to allow for the maximal recognition of signals in the presence of noise; later known as the "Wiener filter." Review: Tukey (1952).

- Norbert Wiener, "The Machine Age", [1949]. Unpublished. Written for The New York Times.

- Norbert Wiener, The Human Use of Human Beings: Cybernetics and Society, Boston, MA: Houghton Mifflin, 1950, 241 pp; 2nd ed., 1954; London: Eyre and Spottiswoode, 1954; New York: Avon Books, 1967; New York: Da Capo Press, 1988; repr., intro. Steve J. Heims, London: Free Association Books, 1989, xxx+199 pp; new ed., 1990.

- Cybernétique et société: l'usage humain des êtres humains, Paris: Union Générale d'Éditions, 1952; 1971; repr., 2014. (French)

- Mensch und Menschmaschine, Frankfurt am Main: Metzner, 1952; 4th ed., 1972. (German)

- Kibernetika i obshchestvo [Кибернетика и общество], trans. E.G. Panfilov, Moscow: IIL, 1958, 200 pp. (Russian)

- more translations

Macy Conferences on Cybernetics (1946-1953)

- Cybernetics: Transactions of the Sixth Conference, New York: Josiah Macy, Jr. Foundation, 1949.

- Cybernetics: Transactions of the Seventh Conference, eds. Heinz von Foerster, Margaret Mead, and Hans Lukas Teuber, New York: Josiah Macy, Jr. Foundation, 1950.

- Cybernetics: Transactions of the Eighth Conference, eds. Heinz von Foerster, Margaret Mead, and Hans Lukas Teuber, New York: Josiah Macy, Jr. Foundation, 1952.

- Cybernetics: Transactions of the Ninth Conference, eds. Heinz von Foerster, Margaret Mead, and Hans Lukas Teuber, New York: Josiah Macy, Jr. Foundation, 1953.

- Cybernetics: Transactions of the Tenth Conference, eds. Heinz von Foerster, Margaret Mead, and Hans Lukas Teuber, New York: Josiah Macy, Jr. Foundation, 1955.

- Cybernetics | Kybernetik 1: The Macy-Conferences 1946–1953. Band 1. Transactions/Protokolle, ed. Claus Pias, Zürich/Berlin: Diaphanes, 2003, 736 pp. [11] [12] (German),(English)

- Cybernetics | Kybernetik 2: The Macy-Conferences 1946–1953. Band 2. Documents/Dokumente, ed. Claus Pias, Zürich: diaphanes, 2004, 512 pp. Introduction. [13] (German),(English)

- Cybernetics: The Macy-Conferences 1946-1953: The Complete Transactions, ed. Claus Pias, Zürich: Diaphanes, 2016, 734 pp.

Information theory and cybernetics in France (1950s)

Raymond Ruyer, La cybernétique et l'origine de l'information, 1954. PDF.

- Norbert Wiener, Cybernetics: or Control and Communication in the Animal and the Machine, Paris: Hermann & Cie, Cambridge, MA: Technology Press, and New York: John Wiley & Sons, 1948, 194 pp. Review: Dubarle (1948, FR).

- Dominique Dubarle, "Idées scientifiques actuelles et domination des faits humains", Esprit 9:18 (1950), pp 296-317. (French)

- Louis de Broglie (ed.), La Cybernétique: théorie du signal et de l'information, Paris: Éditions de la Revue d'optique théorique et instrumentale, 1951, 318 pp. Contributors include physicists and mathematicians Robert Fortet, M.D. Indjoudjian, A. Blanc-Lapierre, P. Aigrain, J. Oswald, Dennis Gabor, Jean Ville, Pierre Chavasse, Serge Colombo, Yvon Delbord, Jean Icole, Pierre Marcou, and Edouard Picault. [14] (French)

- Norbert Wiener, Cybernétique et société, l'usage humain des êtres humains [1950], Paris: Deux-Rives, 1952; 2nd ed., Paris: 10-18, 1962; 1971. (French)

- Louis Couffignal, Les machines à penser, Paris: Minuit, 1952, 153 pp; 2nd ed., rev., 1964, 133 pp. (French)

- Denkmaschinen, trans. Elisabeth Walther with Max Bense, intro. Max Bense, Stuttgart: Klipper, 1955, 186 pp; repr., 1965. (German)

- Colloques Internationaux du Centre National de la Recherche Scientifique 47 (1953). Proceedings of a congress held in Paris in January 1951. Paul Chauchard: the congress was "the first manifestation in France of the young cybernetics, with the participation of N. Wiener, the father of this science." For this congress, organised by the French scientists Louis Couffignal and Pérès, both of whom had visited the U.S. laboratories, and sponsored by the Rockefeller Foundation, a number of foreigners were invited, including Howard Aiken, Warren McCulloch, Maurice Wilkes, Grey Walter, Donald MacKay and Ross Ashby, along with Wiener who was staying in Paris for a couple of months at the Collège de France. 300 people attended; 38 papers were presented; 14 machines from six different countries were demonstrated. [15] (French)

- Pierre de Latil, La pensée artificielle: introduction à la cybernétique, Paris: Gallimard, 1953. (French)

- Thinking by Machine: A Study of Cybernetics, trans. Y.M. Golla, et al., London: Sidgwick & Jackson, 1956, xiii+353 pp; repr., Boston: Houghton Mifflin, 1957, 353 pp. (English)

- El Pensamiento artificial: introducción a la cibernética, trans. Alberto Luis Bixio, Buenos Aires: Losada, 1958, 366 pp. (Spanish)

- O Pensamento artificial: introdução a cibernetica, trans. Jeronimo Monteiro, São Paulo: Ibrasa, 1959; 2nd ed., 1968, 337 pp; 3rd ed., 1973. (Brazilian Portuguese)

- Il pensiero artificiale: introduzione alla cibernetica, trans. Delfo Ceni, Milan: Feltrinelli, 1962, 397 pp. (Italian)

- Benoît Mandelbrot, Contributions à la théorie mathématique des jeux de communications, Institut de Statistiques de l'Université de Paris 2, 1953. Ph.D. dissertation in mathematics making a connection between game theory and information theory. He showed for instance that both thermodynamics and statistical structures of language can be explained as results of minimax games between ‘nature’ and ‘emitter’. He also made the connection between the definitions of information given by the British statistician Ronald A. Fisher in the 1920s, by the physicist Dennis Gabor in 1946 and the already well-known definition proposed by Shannon. Mandelbrot was at MIT from 1952-1954 and later at the IAS in Princeton. [16] (French)

- Raymond Ruyer, La cybernétique et l'origine de l'information, Paris: Flammarion, 1954. (French)

- G.Th. Guilbaud, La cybernétique, Paris: PUF, 1954, 135 pp. (French)

- La Cibernética, Barcelona: Vergara, 1956, 225 pp. (Spanish)

- What Is Cybernetics?, trans. Valerie Mackay, London: Heinemann, 1959, 126 pp. (English)

- Cybernetik, Aldus/Bonniers, 1962, 104 pp; repr., 1966. (Swedish)

- Marcel-Paul Schützenberg, "Contributions aux applications statistiques de la théorie de l’information", Publications de l'Institut de Statistique de l'Université de Paris 3:1-2 (1954), pp 3-117; repr. in Œuvres complètes, Tome 3: 1953-1955, Paris: Institut Gaspard-Monge, Université Paris-Est, 2009, pp 56-161. Ph.D. Dissertation defended at the Faculté des Sciences in Paris in June 1953. (French)

- Paul Cossa, La cybernétique: du cerveau humain aux cerveaux artificiels, Paris: Masson, 1955, 98 pp; 2nd ed., rev., 1957, 102 pp. (French)

- Pol Kossa (Поль Косса), Kibernetika: «Ot chelovecheskogo mozga k mozgu iskusstvennomu» [Кибернетика: «От человеческого мозга к мозгу искусственному»], trans. P.K. Anokhin, Moscow: Izdatelstvo inostrannoy literatury, 1958, 122 pp. Trans. of 2nd ed. (Russian)

- Cibernética: del cerebro humano a los cerebros artificiales, Barcelona: Reverté, 1963, 74 pp. (Spanish)

- Marcel-Paul Schützenberg, "La théorie de l'information", in Cahiers d'actualité et de synthèse, Encyclopédie française, Paris: Société Nouvelle de l'Encyclopédie Française, 1957, pp 9-21. (French)

Information theory and cybernetics in the Soviet Union (1950s)

Liapunov, Kitov, Sobolev, "Osnovnye cherty kibernetiki", 1955. View online.

A.N. Kolmogorov, Teoriya peredachi informatsii, 1956. DJVU.

- Claude Shannon (Клод Шеннон), "Statisticheskaia teoriia peredachi elektricheskikh signalov" [Статистическая теория передачи электрических сигналов; The Statistical Theory of Electrical Signal Transmission] [1948], in Teoriya peredachi elektricheskikh signalov pri nalichii pomekh [Теория передачи электрических сигналов при наличии помех], ed. Nikolai A. Zheleznov (Н. А. Железнов), Moscow: Izdatelstvo inostrannoi literatury (ИИЛ), 1953, pp 7-87. The editor rid the work of the words information, communication, and mathematical entirely, put entropy in quotation marks, and substituted data for information throughout the text. He also assured the reader that Shannon’s concept of entropy had nothing to do with physical entropy and was called such only on the basis of "purely superficial similarity of mathematical formulae". [17] (Russian)

- "Matematicheskaya teoriya svyazi" [Математическая теория связи] [1948], trans. S. Karpov, in Raboty po teorii informatsii i kibernetike [Работы по теории информации и кибернетике], Moscow: Izdatelstvo inostrannoi literatury (ИИЛ), 1963, pp 243-332. (Russian)

- Aleksei Liapunov, Anatolii Kitov, Sergei Sobolev, "Osnovnye cherty kibernetiki" [Основные черты кибернетики; Basic Features of Cybernetics], Voprosy filosofii [Вопросы философии] 141:4, Jul-Aug 1955, pp 136-148. The first Soviet article speaking positively about cybernetics and non-technical applications of information theory, authored by three specialists in military computing—Liapunov, a noted mathematician and the creator of the first Soviet programming language; Kitov, an organizer of the first military computing centers; and Sobolev, the deputy head of the Soviet nuclear weapons program in charge of the mathematical support. They presented cybernetics as a general "doctrine of information", of which Shannon’s theory of communication was but one part. The three authors interpreted the notion of information very broadly, defining it as "all sorts of external data, which can be received and transmitted by a system, as well as the data that can be produced within the system." Under the rubric of "information" fell any environmental influence on living organisms, any knowledge acquired by man in the process of learning, any signals received by a control device via feedback, and any data processed by a computer. [18] [19] (Russian)

- Ernst Kolman (Э. Кольман), "Chto takoe kibernetika?" [Что такое кибернетика?], Voprosy filosofii [Вопросы философии] 141:4, Jul-Aug 1955, pp 148-159; repr. in Filosofskiye voprosy sovremennoj fiziki [Философские вопросы современной физики], ed. I.V. Kuznetsov, Moscow: Gospolitizdat, 1958, pp 222-247. Lecture defending cybernetics, delivered at the Academy of Social Sciences in November 1954. (Russian)

- A.N. Kolmogorov (А. Н. Колмогоров), Teoriya peredachi informatsii [Теория передачи информации], Moscow, 1956. (Russian)

- Ernst Kolman (Э. Кольман), Kibernetika [Кибернетика], Moscow: Znanie (Знание), 1956, 39 pp. (Russian)

- Claude Shannon (К.Э. Шеннон), John McCarthy (Дж. Маккарти) (eds.), Avtomaty. Sbornik statey [Автоматы. Сборник статей], trans. A.A. Liapunov, et al., Moscow: Izdatelstvo inostrannoy literatury (Издательство иностранной литературы), Nov 1956, 404 pp. Trans. of a collection of papers on automata theory, Automata Studies (Princeton University Press, 1956); with texts by John von Neumann, Marvin Minsky, Stephen Cole Kleene, et al. [20] (Russian)

- Igor’ A. Poletaev, Signal: O nekotorykh poniatiiakh kibernetiki [Сигнал: О некоторых понятиях кибернетики], Moscow: Sovetskoe radio, 1958, 413 pp. The first Soviet book on cybernetics. (Russian)

- Pol Kossa (Поль Косса), Kibernetika: «Ot chelovecheskogo mozga k mozgu iskusstvennomu» [Кибернетика: «От человеческого мозга к мозгу искусственному»] [1957], trans. P.K. Anokhin, Moscow: Izdatelstvo inostrannoy literatury, 1958, 122 pp. Trans. of 2nd ed. (Russian)

- A.N. Kolmogorov (А. Н. Колмогоров), "Kibernetika" [Кибернетика], in Bolshaya sovetskaya entsiklopediya, t. 51 [Большая советская энциклопедия], 2nd ed., Moscow: BSE (БСЭ), 1958, pp 149-151.

- Problemy kibernetiki [Проблемы кибернетики], ed. A.A. Liapunov (А.А. Ляпунов), et al., Moscow, 1958-1984. Journal. (Russian)

- Z.I. Rovenskiy (З.И. Ровенский), A.I. Uyemov (А.И. Уемов), Ye.A. Uyemova (Е.А. Уемова) (eds.), Mashina i mysl: filosofskiy ocherk o kibernetike [Машина и мысль: философский очерк о кибернетике], Moscow: Gospolitizdat (Госполитиздат), 1960, 143 pp. (Russian)

- O.B. Lupanov (ed.), Kiberneticheskiy sbornik, t. 1 [Кибернетический сборник], Mir (Мир), 1960, 289 pp, PDF. [21] (Russian)

- A.I. Berg (А.И. Берг) (ed.), Kibernetika na sluzhbe kommunizma. Sbornik statey [Кибернетика на службе коммунизма. Сборник статей], 1961, 312 pp. (Russian)

- Bibliography

- David Dinsmore Comey, "Soviet Publications on Cybernetics", Studies in Soviet Thought 4:2, 1964, pp 142-161. [22]

- Lee R. Kerschner, "Western Translations of Soviet Publications on Cybernetics", Studies in Soviet Thought 4:2, 1964, pp 162-177. [23]

Information theory and cybernetics in Germany (1950s)

- Norbert Wiener, Mensch und Menschmaschine [1950], trans. Gertrud Walther, Frankfurt am Main: Metzner, 1952, 211 pp; 4th ed., 1972. (German)

- Louis Couffignal, Denkmaschinen [1952], trans. Elisabeth Walther with Max Bense, intro. Max Bense, Stuttgart: Klipper, 1955, 186 pp; 1965. (German)

- Georg Klaus, "Vortrag über philosophische und gesellschaftliche Probleme der Kybernetik", 1957. Lecture. (German)

- Gotthard Günther, Das Bewusstsein der Maschinen: eine Metaphysik der Kybernetik, Krefeld, Baden-Baden: Agis, 1957; 2nd ed., 1963; 3rd ed. as Das Bewusstsein der Maschinen: eine Metaphysik der Kybernetik, mit einem Beitrag aus dem Nachlass: 'Erkennen und Wollen', eds. Eberhard von Goldammer and Joachim Paul, Baden-Baden: Agis, 2002. [24] (German)

- Werner Meyer-Eppler, Grundlagen und Anwendungen der Informationstheorie, Berlin: Springer, 1959, xviii+446 pp; 2nd ed., eds. Georg Heike and K. Löhn, Berlin: Springer, 1969. Reviews: Tamm (1960), Billingsley (1961, EN), Adam (1965). (German)

- Georg Klaus, Kybernetik in philosophischer Sicht, East-Berlin: Dietz, 1961, 491 pp; rev ed., 1963; repr., 1965. Reviews: Stojanow (Deutsche Zeitschr Phil), Stock (Studies in Soviet Thought). (German)

- Георг Клаус, Kibernetika i filosofiya [Кибернетика и философия], trans. I.S. Dobronravov, A.P. Kupriyanov, and L.A. Leytes, Moscow: Изд. иностранной литературы, 1963, 530 pp. [25] (Russian)

- Cong zhe xue kan kong zhi lun [从哲学看控制论], trans. Zhi xue Liang, Beijing: Zhong guo she hui ke xue chu ban she, 1981, 462 pp. (Chinese)

Popularisation of information theory in the United States (1950s)

- Charles Eames, Ray Eames, A Communication Primer, 16 mm, 1953, 21 min. An educational film aimed at students, sponsored by IBM and distributed by Museum of Modern Art.

- Francis Bello, "The Information Theory", Fortune, Vol. 48 (December 1953), pp 136-158.

- The Search, 1954. Documentary film featuring Shannon, Forrester and Wiener, produced by NBC.

- Stanford Goldman, Information Theory, Prentice-Hall, 1953, 385 pp; New York: Dover, 1968; 2005.

- S. Goldman (С. Голдман), Teoriya informatsii [Теория информации], Moscow, 1957. (Russian)

- Léon Brillouin, Science and Information Theory, New York: Academic Press, 1956. A best-selling reformulation of physics in terms of information theory.

- Information and Control, journal, founded 1958. Founding editors: Léon Brillouin, Colin Cherry, Peter Elias.

Claude E. Shannon, "The Bandwagon", 1956. Download.

Debate in Transactions (1955-56)

- L.A. De Rosa, "In Which Fields Do We Graze?", I.R.E. Transactions on Information Theory 1 (December 1955). Editorial by the chairman of the Professional Group on Information Theory: "The expansion of the applications of Information Theory to fields other than radio and wired communications has been so rapid that oftentimes the bounds within which the Professional Group interests lie are questioned. Should an attempt be made to extend our interests to such fields as management, biology, psychology, and linguistic theory, or should the concentration be strictly in the direction of communication by radio or wire?"

- Claude E. Shannon, "The Bandwagon", I.R.E. Transactions on Information Theory 2 (1956), p 3. Shannon's call for keeping information theory "an engineering problem": "Workers in other fields should realize that the basic results of the subject are aimed in a very specific direction, a direction that is not necessarily relevant to such fields as psychology, economics, and other social sciences... [T]he establishing of such applications is not a trivial matter of translating words to a new domain, but rather the slow tedious process of hypothesis and verification. If, for example, the human being acts in some situations like an ideal decoder, this is an experimental and not a mathematical fact, and as such must be tested under a wide variety of experimental situations."

- "Bandvagon" [Бандвагон], trans. S. Karpov, in Raboty po teorii informatsii i kibernetike [Работы по теории информации и кибернетике], Moscow: IIL (ИИЛ), 1963, pp 667-668. (Russian)

- Norbert Wiener, "What Is Information Theory?", I.R.E. Transactions on Information Theory 3 (June 1956), p 48. A rejection of Shannon's narrowing focus and insistence that "information" remain part of a larger indissociable ensemble including all the sciences: "I am pleading in this editorial that Information Theory...return to the point of view from which it originated: that of the general statistical concept of communication... What I am urging is a return to the concepts of this theory in its entirety rather than the exaltation of one particular concept of this group, the concept of the measure of information into the single dominant idea of all."

Historical analysis and review of the impact of information theory

In mathematics, engineering and computing

- Written by engineers and mathematicians

- Colin Cherry, "A History of the Theory of Information", IRE Transactions on Information Theory 1:1 (1953), pp 22-43.

- Louis H. M. Stumpers, "A Bibliography on Information Theory (Communication Theory - Cybernetics)", I.R.E. Transactions on Information Theory 1 (1955), pp 31-47.

- John Pierce, "The Early Days of Information Theory", IEEE Transactions on Information Theory 19:1 (January 1973), pp 3-8. [26]

- William Aspray, "Scientific Conceptualization of Information: A Survey", Annals of the History of Computing 7:2 (April 1985), pp 117-140.

- Karl L. Wildes, Nilo A. Lindgren, "The Research Laboratory of Electronics", in A Century of Electrical Engineering and Computer Science at MIT, 1882-1982, MIT Press, 1985, pp 242-278.

- E.M. Rogers, T.W. Valente, "A History of Information Theory in Communication", in Between Communication and Information, Vol. 4: Information and Behavior, eds. J.R. Schement and B.D. Ruben, New Brunswick, NJ: Transaction, 1993, pp 35-56.

- Sergio Verdú, "Fifty Years of Shannon Theory", IEEE Transactions on Information Theory 44:6 (1998), pp 2057-2078.

- Ronald R. Kline, "What Is Information Theory a Theory Of? Boundary Work Among Scientists in the United States and Britain During the Cold War", in The History and Heritage of Scientific and Technical Information Systems: Proceedings of the 2002 Conference, Chemical Heritage Foundation, eds. W. Boyd Rayward and Mary Ellen Bowden, Medford, NJ: Information Today, 2004, pp 15-28.

- Lav Varshney, "Engineering Theory and Mathematics in the Early Development of Information Theory", IEEE Conf. History of Electronics, 2004, pp 1-6.

- Ya.I. Fet (ed.), Iz istorii kibernetiki, Novosibirsk: Geo, 2006, 339 pp. (Russian)

N. Katherine Hayles, How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics, 1999. Download.

- Written by historians and theorists

- Peter Galison, "The Ontology of the Enemy: Norbert Wiener and the Cybernetic Vision", Critical Inquiry 21:1 (Autumn 1994), pp 228-266. Demonstrates the significance of the war effort of devising a servomechanical shooting device, in which Wiener participated, as a defining moment in the elaboration of the cybernetic model.

- N. Katherine Hayles, How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics, University of Chicago Press, 1999, 350 pp.

- Jean-Pierre Dupuy, The Mechanization of the Mind: On the Origins of Cognitive Science, trans. M. B. Debevoise, Princeton University Press, 2000, 240 pp. A survey of the Macy conferences. Review, [27].

- David A. Mindell, Between Human and Machine: Feedback, Control, and Computing before Cybernetics, Johns Hopkins University Press, 2002, 439 pp. [28]

- Slava Gerovitch, "'Russian Scandals': Soviet Readings of American Cybernetics in the Early Years of the Cold War", The Russian Review 60 (October 2001), pp 545-568.

- David Mindell, Jérôme Segal, Slava Gerovitch, "From Communications Engineering to Communications Science: Cybernetics and Information Theory in the United States, France, and the Soviet Union", in Science and Ideology: A Comparative History, ed. Mark Walker, London and New York: Routledge, 2002, pp 66-96. [29]

- Jérôme Segal, Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle, Paris: Syllepse, 2003, 906 pp; 2011. (French) Contents, [30], Review, Review.

- Slava Gerovitch, From Newspeak to Cyberspeak: A History of Soviet Cybernetics, MIT Press, 2004, 383 pp.

- Yongdong Peng, "The Early Diffusion of Cybernetics in China (1929-1960)", Studies in the History of the Natural Sciences, vol. 23, 2004, pp. 299-318. (Chinese) [31] [32]

- Bernard Dionysius Geoghegan, "The Historiographic Conception of Information: A Critical Survey", IEEE Annals of the History of Computing 30:1 (2008), pp 66-81.

- Mathieu Triclot, Le moment cybernétique: la constitution de la notion d’information, Seyssel: Champ Vallon, 2008. (French)

- Axel Roch, Claude E. Shannon. Spielzeug, Leben und die geheime Geschichte seiner Theorie der Information, Berlin: gegenstalt, 2009, 256 pp; 2nd ed., 2010. (German) Excerpt, [33].

- Andrew Pickering, The Cybernetic Brain: Sketches of Another Future, University of Chicago Press, 2010, 526 pp. Renders a history of British cybernetics with only brief excursions into information theory.

- Pierre-Éric Mounier-Kuhn, L’Informatique en France, de la seconde guerre mondiale au Plan Calcul. L’émergence d’une science, Paris: PUPS, 2010, 720 pp. (French) [34]

- James Gleick, The Information: A History, A Theory, A Flood, Knopf, 2011, 496 pp.

- Ronan Le Roux, Une histoire de la cybernétique en France, 1948-1970, Paris: Classiques Garnier, 2013. (French)

Bernard Dionysius Geoghegan, The Cybernetic Apparatus: Media, Liberalism, and the Reform of the Human Sciences, 2012, Log.

In the social sciences and humanities

- N. Katherine Hayles, "Information or Noise? Economy of Explanation in Barthes’s S/Z and Shannon’s Information Theory", in One Culture: Essays in Science and Literature, ed. George Levine, Madison: University of Wisconsin Press, 1987, pp 119-142. [35]

- Steve J. Heims, Constructing a Social Science for Postwar America: The Cybernetics Group (1946–1953), MIT Press, 1993. A survey of the Macy conferences and dissemination of information theory outside the natural sciences. Review, [36].

- Jérôme Segal, "Les sciences humaines et la notion scientifique d'information", in Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle, Paris: Syllepse, 2003; 2011, pp 487-534. (French) [37]

- Céline Lafontaine, L’Empire cybernétique: des machines à penser à la pensée machine [The Cybernetic Empire: From Machines for Thinking to the Thinking Machine], Paris: Seuil, 2004. (French). Review.

- Céline Lafontaine, "The Cybernetic Matrix of 'French Theory'", Theory, Culture & Society 24:5 (2007), pp 27-46. On the influence of cybernetics on the development of French structuralism, post-structuralism and postmodern philosophy after WWII.

- Michael Hagner, Erich Hörl (eds.), Die Transformationen des Humanen, Suhrkamp, 2008, 450 pp. (German). Contents and Introduction, [38].

- Jürgen Van de Walle, "Roman Jakobson, Cybernetics and Information Theory: A Critical Assessment", Folia Linguistica Historica 29 (December 2008), pp 87-123.

- Ronan Le Roux, "Lévi-Strauss, une réception paradoxale de la cybernétique", L’Homme 189 (January-March 2009), pp 165-190. (French)

- Bruce Clarke, "Information", in Critical Terms for Media Studies, eds. W.J.T. Mitchell and Mark B.N. Hansen, Chicago: University of Chicago Press, 2010, pp 157-171.

- Lydia H. Liu, "The Cybernetic Unconscious: Rethinking Lacan, Poe, and French Theory", Critical Inquiry 36:2 (Winter 2010), pp 288-320. [39]

- Bernard Dionysius Geoghegan, "From Information Theory to French Theory: Jakobson, Lévi-Strauss, and the Cybernetic Apparatus", Critical Inquiry 38 (Autumn 2011), pp 96-126.

- Bernard Dionysius Geoghegan, The Cybernetic Apparatus: Media, Liberalism, and the Reform of the Human Sciences, Northwestern University and Bauhaus-Universität Weimar, 2012, 262 pp. Ph.D. Dissertation.

- Ronan Le Roux, "Lévi-Strauss, Lacan et les mathématiciens", Les dossiers de HEL 3, Paris: SHESL, 2013. (French)

In other fields

- Donna Haraway, "The High Cost of Information in Post World War II Evolutionary Biology: Ergonomics, Semiotics, and the Sociobiology of Communications Systems", Philosophical Forum 13:2-3 (Winter/Spring 1981-82), pp 244-278.

- Donna Haraway, "Signs of Dominance: From a Physiology to a Cybernetics of Primate Society, C.R. Carpenter, 1930-1970", in Studies in History of Biology, Vol. 6, eds. William Coleman and Camille Limoges, Johns Hopkins University Press, 1982, pp 129-219.

- Philip Mirowski, "What Were von Neumann and Morgenstern Trying to Accomplish?", in Toward a History of Game Theory, ed. E.R. Weintraub, Duke University Press, 1992, pp. 113-147. Information theory in economics.

- Evelyn Fox Keller, Refiguring Life: Metaphors of Twentieth-Century Biology, Columbia University Press, 1995, pp 81-118. Information theory in embryology.

- Lily E. Kay, "Cybernetics, Information, Life: The Emergence of Scriptural Representations of Heredity", Configurations 5:1 (Winter 1997), pp 23-91. Information theory in genetics. [40]

- Philip Mirowski, "Cyborg Agonistes: Economics Meets Operations Research in Mid-Century", Social Studies of Science 29:5 (1999), pp. 685-718. Information theory in economics.

- Lily E. Kay, "From Logical Neurons to Poetic Embodiments of Mind: Warren S. McCulloch’s Project in Neuroscience", Science in Context 14:4 (2001), pp 591-614. Information theory in neuroscience.

- Jennifer S. Light, From Warfare to Welfare: Defense Intellectuals and Urban Problems in Cold War America, Johns Hopkins University Press, 2003. Information theory in urban planning.

- Jérôme Segal, "The Use of Information Theory in Biology: a Historical Perspective", History and Philosophy of the Life Sciences 25:2 (2003), pp 275-281. [41]

- Jérôme Segal, "L'information et le vivant: aléas de la métaphore informationnelle", in Le zéro et le un: histoire de la notion scientifique d’information au 20e siècle, Paris: Syllepse, 2003, pp 487-534; 2011. (French). Information theory in genetics and biology. [42]

- Jacques Lafitte, Réflexions sur la science des machines [Reflections on the Science of Machines], 1911-32.

- Marian Smoluchowski, "Experimentell nachweisbare, der üblichen Thermodynamik widersprechende Molekularphänomene", Phys. Zeitschr. 13, 1912. Connecting the problem of Maxwell's Demon with that of Brownian motion, Smoluchowski wrote that in order to violate the second principle of thermodynamics, the Demon had to be "taught" [unterrichtet] regarding the speed of molecules. Wiener mentions him in passing in his Cybernetics (1948) [43].

- Hermann Schmidt, general regulatory theory [Allgemeine Regelungskunde], 1941-54.

See also

Links

- Information theory in IEEE Global History Network

- Information in Stanford Encyclopedia of Philosophy

- A Mathematical Theory of Communication at Wikipedia

- History of information theory at Wikipedia

- Information theory at Wikipedia

- Macy Conferences at Wikipedia