As I write this in August 2015, we are in the middle of one of the worst refugee crises in modern Western history. The European response to the carnage beyond its borders is as diverse as the continent itself: in ironic contrast to the newly built barbed-wire fences protecting the borders of Fortress Europe from Middle Eastern refugees, the British Museum (and probably other museums) is launching projects to “protect antiquities taken from conflict zones” (BBC News 2015). We don’t quite know how the conflict artifacts end up in the custody of the participating museums. It may be that asylum seekers carry such antiquities on their bodies and place them on the steps of the British Museum as soon as they emerge alive on the British side of the Eurotunnel. But it is more likely that Western heritage institutions, if not playing Indiana Jones in North Africa, Iraq, and Syria, are smuggling objects out of war zones and buying looted artifacts on the international gray/black antiquities market to save at least some of them from disappearing into the fortified vaults of wealthy private buyers (Shabi 2015). There seems to be some consensus that artifacts thought to be part of the common cultural heritage of humanity cannot be left in the hands of the collectives who own or control them, especially if those collectives try to destroy them or sell them off to the highest bidder.

The exact limits of expropriating valuables in the name of humanity are heavily contested. Take, for example, another group of self-appointed protectors of culture, also collecting and safeguarding, in the name of humanity, valuable items circulating in the cultural gray/black markets. For the last decade, Russian scientists, amateur librarians, and volunteers have been collecting millions of copyrighted scientific monographs and hundreds of millions of scientific articles in piratical shadow libraries and making them freely available to anyone and everyone, without any charge or limitation whatsoever (Bodó 2014b; Cabanac 2015; Liang 2012). These pirate archivists think that, despite being copyrighted and locked behind paywalls, scholarly texts belong to humanity as a whole, and they seek to ensure that every single one of us has unlimited and unrestricted access to them.

The support for a freely accessible scholarly knowledge commons takes many different forms. A growing number of academics publish in open access journals and offer their own scholarship via self-archiving. But as the data suggest (Bodó 2014a), there are also hundreds of thousands of people who use pirate libraries on a regular basis. Many participate in courtesy-based academic self-help networks that provide ad hoc access to paywalled scholarly papers (Cabanac 2015).[1] But only a few people believe that scholarly knowledge could and should be liberated from proprietary databases, even by force, if that is what it takes. There are probably no more than a few thousand individuals who occasionally donate a few bucks to cover the operating costs of piratical services or share their private digital collections with the world. And the number of pirate librarians who devote most of their time and energy to operating highly risky illicit services is probably no more than a few dozen. Many of them are Russian, and many of the biggest pirate libraries were born in and/or operate from the Russian segment of the Internet.

The development of a stable pirate library, with an infrastructure that enables the systematic growth and development of a permanent collection, requires an environment where the stakes of access are sufficiently high and the risks of action are sufficiently low. Russia certainly qualifies on both counts. However, these are not the only reasons why so many pirate librarians are Russian. The Russian scholars behind the pirate libraries are familiar with the crippling consequences of not having access to fundamental texts in science, whether for political or for purely economic reasons. The Soviet intelligentsia had decades of experience in bypassing censors, creating samizdat content distribution networks to deal with the lack of access to legal distribution channels, and running gray and black markets to survive in a shortage economy (Bodó 2014b). Their skills and attitudes found their way to the next generation, who now run some of the most influential pirate libraries. In a culture where the know-how of resisting information monopolies is part of the collective memory, the Internet becomes the latest in a long series of tools that clandestine information networks use to build alternative publics through the illegal sharing of outlawed texts.

In that sense, the pirate library is a utopian project and something more. Pirate librarians regard their libraries as a legitimate form of resistance against the commercialization of public resources, the (second) enclosure (Boyle 2003) of the public domain. The handful who decide to publicly defend their actions speak in the same voice and tell very similar stories. Aaron Swartz was an American hacker willing to break both laws and locks in his quest for free access. In his 2008 “Guerilla Open Access Manifesto” (Swartz 2008), he forcefully argued for the unilateral liberation of scholarly knowledge from behind paywalls to provide universal access to a common human heritage. A few years later he tried to put his ideas into action by downloading millions of journal articles from the JSTOR database without authorization. Alexandra Elbakyan is a 27-year-old neurotechnology researcher from Kazakhstan and the founder of Sci-Hub, a piratical collection of tens of millions of journal articles that provides unauthorized access to paywalled articles to anyone without an institutional subscription. In a letter to the judge presiding over a court case against her and her pirate library, she explained her motives, pointing out the lack of access to journal articles.[2] Elbakyan also believes that the inherent injustices encoded in the current system of scholarly publishing, which denies access to everyone who is not willing or able to pay and simultaneously denies payment to most of the authors (Mars and Medak 2015), are reason enough to disregard the fundamental IP framework that enables those injustices in the first place. Other shadow librarians expand the basic access/injustice arguments into a wider critique of the neoliberal political-economic system that aims to commodify and appropriate everything that is perceived to have value (Fuller 2011; Interview with Dusan Barok 2013; Sollfrank 2013).

Whatever prompts them to act, pirate librarians firmly believe that the fruits of human thought and scientific research belong to the whole of humanity. Pirates have the opportunity, the motivation, the tools, the know-how, and the courage to create radical techno-social alternatives. So they resist the status quo by collecting and “guarding” scholarly knowledge in libraries that are freely accessible to all.

Both the curators of the British Museum and the pirate librarians claim to save the common heritage of humanity, but any similarities end there. Pirate libraries have no buildings or addresses, no formal boards or employees, no budgets to speak of, and the resources at their disposal are infinitesimal. Unlike the British Museum or the libraries of previous eras, pirate libraries were born out of lack and despair. Their fugitive status prevents them from taking the traditional paths of institutionalization. They are nomadic and distributed by design; they are _ad hoc_ and tactical, pseudonymous and conspiratorial, relying on resources reduced to the absolute minimum so they can survive under extremely hostile circumstances.

Traditional collections of knowledge and artifacts, in their repurposed or purpose-built palaces, are both the products and the embodiments of the wealth and power that created them. Pirate libraries lack the symbols of transubstantiated might, the buildings and all the marble, but as institutions they are as powerful as their more established counterparts. Unlike the latter, whose claim to power was the fact of ownership and the control over access and interpretation, pirates’ power is rooted in the opposite: in their ability to make ownership irrelevant, access universal, and interpretation democratic.

This is the paradox of total piratical archives: they collect enormous wealth, but they do not own or control any of it. As an insurance policy against copyright enforcement, they have already given everything away: they release their source code, their databases, and their catalogs; they put the metadata and the digitized files up on file-sharing networks. They realize that exclusive ownership of or control over any aspect of the library could be a point of failure, so in the best traditions of archiving, they make sure everything is duplicated and redundant, and that many of the copies are under completely independent control. If we disregard for a moment the blatantly illegal nature of these collections, this systematic detachment from the concept of ownership and control is the most radical development in the way we think about building and maintaining collections (Bodó 2015).

Because pirate libraries don’t own anything, they have nothing to lose. Pirate librarians, on the other hand, are putting everything they have on the line. Speaking truth to power has a potentially devastating price. Swartz was caught when he broke into an MIT storeroom to download the articles in the JSTOR database.[3] Facing a 35-year prison sentence and $1 million in fines, he committed suicide.[4] By explaining her motives in a recent court filing,[5] Elbakyan admitted responsibility and probably sealed her own legal and financial fate. But her library is probably safe. In the wake of this lawsuit, pirate libraries are busy securing themselves: pirates are shutting down servers whose domain names were confiscated, archiving databases again and again, spreading the illicit collections through the underground networks, and setting up new servers. It may be easy to destroy individual collections, but nothing in history has been able to destroy the idea of the universal library, open for all.

For the better part of that history, the idea was simply impossible. Today it is simply illegal. But in an era when books are everywhere, the total archive is already here. Distributed among millions of hard drives, it already is a _de facto_ common heritage. We are as gods, and might as well get good at it.[6]

## About the author

**Bodo Balazs,** PhD, is an economist and piracy researcher at the Institute for Information Law (IViR) at the University of Amsterdam. [More »](https://limn.it/researchers/bodo/)

## Footnotes

[1] On such fora, one can ask for and receive otherwise out-of-reach publications through Reddit groups such as [r/Scholar](https://www.reddit.com/r/Scholar) or by using Twitter hashtags such as #icanhazpdf or #pdftribute.

[2] Elsevier Inc. et al. v. Sci-Hub et al., New York Southern District Court, Case No. 1:15-cv-04282-RWS.

[3] While we do not know what he intended to do with the downloaded articles, the prosecution believed his Manifesto stated the motives for his act.

[4] See _United States of America v. Aaron Swartz_, United States District Court for the District of Massachusetts, Case No. 1:11-cr-10260.

[5] Case 1:15-cv-04282-RWS, Document 50, filed 09/15/15, available at [link](https://www.unitedstatescourts.org/federal/nysd/442951/).

[6] I of course stole this line from Stewart Brand (1968), the editor of the _Whole Earth Catalog_, who in turn claims to have stolen it from the British anthropologist Edmund Leach. See [here](http://www.wholeearth.com/issue/1010/article/195/we.are.as.gods) for the details.

## Bibliography

BBC News. 2015. “British Museum ‘Guarding’ Object Looted from Syria.” _BBC News,_ June 5. Available at [link](http://www.bbc.com/news/entertainment-arts-33020199).

Bodó, B. 2015. “Libraries in the Post-Scarcity Era.” In _Copyrighting Creativity_, edited by H. Porsdam (pp. 75–92). Aldershot, UK: Ashgate.

Bodó, B. 2014a. “In the Shadow of the Gigapedia: The Analysis of Supply and Demand for the Biggest Pirate Library on Earth.” In _Shadow Libraries_, edited by J. Karaganis (forthcoming). New York: American Assembly. Available at [link](http://ssrn.com/abstract=2616633).

Bodó, B. 2014b. “A Short History of the Russian Digital Shadow Libraries.” In _Shadow Libraries_, edited by J. Karaganis (forthcoming). New York: American Assembly. Available at [link](http://ssrn.com/abstract=2616631).

Boyle, J. 2003. “The Second Enclosure Movement and the Construction of the Public Domain.” _Law and Contemporary Problems_ 66:33–42. Available at [link](http://dx.doi.org/10.2139/ssrn.470983).

Brand, S. 1968. _Whole Earth Catalog._ Menlo Park, California: Portola Institute.

Cabanac, G. 2015. “Bibliogifts in LibGen? A Study of a Text‐Sharing Platform Driven by Biblioleaks and Crowdsourcing.” _Journal of the Association for Information Science and Technology,_ Online First, 27 March 2015.

Fuller, M. 2011. “In the Paradise of Too Many Books: An Interview with Sean Dockray.” _Metamute._ Available at [link](http://www.metamute.org/editorial/articles/paradise-too-many-books-interview-sean-dockray).

Interview with Dusan Barok. 2013. _Neural_ 10–11.

Liang, L. 2012. “Shadow Libraries.” _e-flux._ Available at [link](http://www.e-flux.com/journal/shadow-libraries/).

Mars, M., and Medak, T. 2015. “The System of a Takedown: Control and De-commodification in the Circuits of Academic Publishing.” Unpublished manuscript.

Shabi, R. 2015. “Looted in Syria–and Sold in London: The British Antiques Shops Dealing in Artefacts Smuggled by ISIS.” _The Guardian,_ July 3. Available at [link](http://www.theguardian.com/world/2015/jul/03/antiquities-looted-by-isis-end-up-in-london-shops).

Sollfrank, C. 2013. “Giving What You Don’t Have: Interviews with Sean Dockray and Dmytri Kleiner.” _Culture Machine_ 14:1–3.

Swartz, A. 2008. “Guerilla Open Access Manifesto.” Available at [link](https://archive.org/stream/GuerillaOpenAccessManifesto/Goamjuly2008_djvu.txt).

Barok

Communing Texts

2014


_A talk given on the second day of the conference [Off the Press](http://digitalpublishingtoolkit.org/22-23-may-2014/program/) held at WORM, Rotterdam, on May 23, 2014. Also available in [PDF](/images/2/28/Barok_2014_Communing_Texts.pdf "Barok 2014 Communing Texts.pdf")._

I am going to talk about publishing in the humanities, including scanning culture, and its unrealised potential online. For this I will treat the internet not only as a platform for storage and distribution but also as a medium with its own specific means for reading and writing, and consider the relevance of plain text and its various rendering formats, such as HTML, XML, markdown, wikitext and TeX.

One of the main reasons why books today are downloaded and bookmarked but hardly read is that, although they may contain something relevant, they begin at the beginning and end at the end; or at least we are used to treating them this way. E-book readers and browsers are equipped with full-text search, but a search for "how does the internet change the way we read" yields nothing interesting beyond a diversion of attention, even though dozens of books have been written on this issue. If one persists, one easily ends up with a folder of dozens of other books, stuck on how to read them. There is a plethora of books online, yet it is mostly machines that read them.

It is surely tempting to celebrate or to despise the age of artificial intelligence, flat ontology and the narrowing of differences between humans and machines, and to write books as if only for machines, or to return to the analogue; but we may as well look back and reconsider the beauty of the simple linear reading of the age of print, not out of nostalgia but for what we can learn from it.

This perspective implies treating texts in their context, and particularly in the way they commute: how they are brought into relation with one another, into a community, by the mere act of writing, through a technique that has developed over time into what we have come to call _referencing_. While in the early days referring to texts was practised simply as a verbal description of the referred writing, over millennia it evolved into a technique with standardised practices and styles, and accordingly it gained _precision_. This precision is, however, nothing machinic: referring to particular passages in other texts instead of to texts as wholes is an act of comradeship, because it spares the reader time in locating the passage. It also makes apparent that it is through contexts that the web of printed books has been woven. But even though referencing, in its precision, was meant to be very concrete, the advent of the web in particular made apparent that it is instead _virtual_, and, for the reader, laborious to follow. The web has shown and taught us that a reference from one document to another can be plastic. To follow a reference from a printed book, the reader has to stand up, walk down the street to a library, pick up the referred volume, flip through its pages until the referred page is found, and then read on until the passage most probably implied is identified; on the web, the reader, _ideally_, merely moves her finger a few millimetres. To click or tap: the difference between the long way and the short way is, obviously, the hyperlink. Of course, in the absence of the short way, even scholars follow a reference the long way only as an exception. An unwritten rule was established to write for readers who are familiar with the literature in the respective field (which in turn reproduces the disciplinarity of reader and writer), while a reader unfamiliar with the referred passage infers its content by interpreting the writer's interpretation of it. The beauty of reading across references was never fully realised. But our question now is: can we be so certain that this practice is still necessary today?

The web silently brought about a way to _implement_ the plasticity of this pointing, although it has not been realised as a legacy of referencing as we know it from print. Today, even when a writer linking a text has a particular passage in mind, and even describes it in detail, the majority of links actually point merely to the beginning of the text. Hyperlinks link documents as wholes by default, and anchors in texts have hardly been thought of as a _requirement_ for precise linking.
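On the web as it is built today, the usual way to point inside an HTML document is the fragment identifier, the part of a URL after `#`, and it only lands on a passage if the target document already carries a matching anchor. The following is a minimal sketch of that dependency using only Python's standard library. The helper `link_target` and the sample document are hypothetical illustrations.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class AnchorCollector(HTMLParser):
    """Collect the ids (and legacy <a name="..."> values) a fragment could point to."""

    def __init__(self):
        super().__init__()
        self.anchors = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "id" or (tag == "a" and name == "name"):
                self.anchors.add(value)


def link_target(url, html):
    """Describe where a link into `html` lands (hypothetical helper)."""
    fragment = urlsplit(url).fragment
    if not fragment:
        return "whole document"  # no fragment: the reader starts at the top
    collector = AnchorCollector()
    collector.feed(html)
    if fragment in collector.anchors:
        return "anchor " + fragment
    return "whole document (no such anchor)"  # nothing to scroll to


doc = '<h1>Communing Texts</h1><p id="p3">The web silently brought about a way.</p>'
print(link_target("http://example.org/doc.html", doc))     # whole document
print(link_target("http://example.org/doc.html#p3", doc))  # anchor p3
print(link_target("http://example.org/doc.html#p7", doc))  # whole document (no such anchor)
```

The author of the target page decides which passages receive an `id`. Whoever writes the link cannot add one.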

If we look at popular online journalism and its use of hyperlinks within the body text, we may claim that hardly anyone can afford to read all those linked articles, let alone reports hundreds of pages long and the like, and that if something is wrong, it will get corrected in the comments anyway. On the internet, the writer is meant to be in a more immediate feedback loop with the reader. But readers are not always keen to comment, nor are they always allowed to. We may easily forget that quoting half of a sentence is never quoting the full sentence, and that a truly complete quote would require quoting its source text in its whole length. Think of the quote _information wants to be free_, which is rarely quoted with its wider context taken into account. Even factoids and numbers can be quoted to the letter, yet taken out of context their meaning can shift significantly. Our aversion to following a reference may well stem from the fact that we are usually pointed to begin reading another text from its beginning.

This is exactly where the practices of linking, as on the web, and of referencing, as in scholarly work, may benefit from one another. The question is _how_ to bring them closer together.

The approach I am going to propose requires a conceptual leap to something we have not been taught.

For centuries, the primary format of the text has been the page: a vessel, a medium, a frame containing text embedded between straight, more or less explicit, horizontal and vertical borders. Even before page materials such as papyrus and paper appeared, text was already contained in lines and columns, a structure which we have learnt to perceive as a grid. The idea of the grid allows us to view text as structured in lines and pages, which in turn come in handy when something is to be referred to. Pages are counted as the distance from the beginning of the book, and lines as the distance from the beginning of the page. This is not surprising, because it accords with an inherent quality of the material medium: a sheet of paper has a shape, which in turn shapes the body of a text. This tradition goes back as far as antiquity and the bookroll, in which we indeed find textual grids.

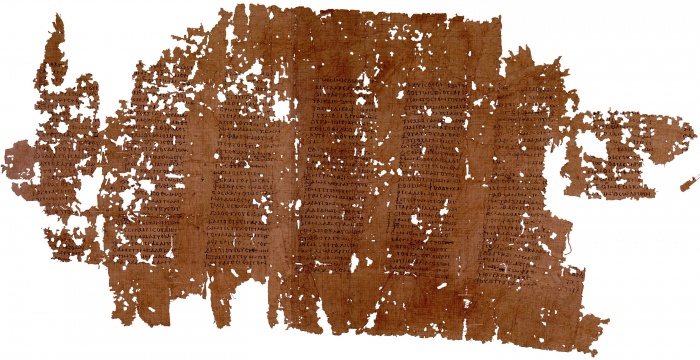

[](/File:Papyrus_of_Plato_Phaedrus.jpg)

A crucial difference between print and digital is that text files, whether HTML documents, markdown documents or database-driven texts, did not inherit this quality. Their containers are simply not structured into pages, precisely because of the nature of their materiality as media. Files are written on memory drives in scattered chunks, beginning at point A and ending at point B of a drive, continuing from C until D, and so on. Where each of these chunks starts is ultimately independent of what it contains.

Forensic archaeologists would confirm that when a portion of an ASCII document survives, it is not a page here and a page there, or the first half of the book, but textual blocks from completely arbitrary places in the document.
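A toy model of a drive can make the point about scattered chunks concrete. This is a deliberately simplified sketch and does not describe how any particular filesystem works. The 16-byte block size and the random placement are assumptions chosen for readability. Real filesystems typically use blocks of several kilobytes and smarter allocation.

```python
import random

BLOCK_SIZE = 16  # assumption: tiny blocks so that the cuts are easy to see


def store(data: bytes, disk_slots: int, seed: int = 0):
    """Write `data` in fixed-size blocks to scattered slots of a toy disk."""
    blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
    slots = random.Random(seed).sample(range(disk_slots), len(blocks))
    disk = [None] * disk_slots
    for slot, block in zip(slots, blocks):
        disk[slot] = block
    return disk, slots  # `slots` stands in for the filesystem's block map


def read(disk, block_map):
    """Reassemble the file by following the block map in order."""
    return b"".join(disk[slot] for slot in block_map)


data = b"For centuries, the primary format of the text has been the page."
disk, block_map = store(data, disk_slots=32)

# Block boundaries ignore words and lines: the first block ends mid-word.
print(disk[block_map[0]])  # b'For centuries, t'
# Only the block map restores the order; a lone surviving block is an arbitrary fragment.
print(read(disk, block_map) == data)  # True
```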

This may sound unrelated to how we humans structure our writing in HTML documents, emails, Office documents, even computer code, but it is a reminder that we structure them for habitual (interfaces are rectangular) and cultural (human-readability) reasons rather than out of a technical necessity stemming from the material properties of the medium. This distinction is apparent, for example, in HTML, XML, wikitext and TeX documents, whose content is both stored on the physical drive and treated, when rendered for reading interfaces, as a single flow of text; the same goes for other texts when automatic line-breaking is turned off. This is because line-breaks, spaces and everything else are merely numbers corresponding to symbols in a character set.
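You can see this by asking Python for the numbers behind a short two-line string. The sample text is arbitrary.

```python
text = "Pages are counted\nfrom the beginning."

# Each character, including spaces and the line-break, is stored as a number
# (its Unicode code point, which for these characters equals its ASCII code).
codes = [ord(ch) for ch in text]

print(codes[:6])  # [80, 97, 103, 101, 115, 32]  -> 'P', 'a', 'g', 'e', 's', ' '
print(codes[17])  # 10 -> the line-break is just another number in the sequence

# "Lines" only appear when a program chooses to split the flow at the number 10.
print(text.split("\n"))  # ['Pages are counted', 'from the beginning.']
```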

So how do we address a section in this kind of document? One option offers itself, the way computers do it, or rather the way we made them do it: as the position of the beginning of the section in the array, in one long line. This would mean treating the text document not in its grid-like format but as a line, which merely adapts to the properties of its display when rendered, as is nicely implied in the animated logo of this event and as we know it from EPUBs, for example.
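The following sketch contrasts addressing by position in the flow with addressing by line. Python's `textwrap` module stands in for a reading interface. When the same text is wrapped at two widths, the passage lands on different lines, but its character offset stays the same. The sample sentence and the helper `line_of` are illustrative assumptions.

```python
import textwrap

text = ("For centuries, the primary format of the text has been the page. "
        "Files, however, are one long line of characters.")

# Address of the passage as a position in the single flow of characters.
offset = text.index("Files")


def line_of(text, passage, width):
    """Return the 0-based line on which `passage` appears when wrapped at `width`."""
    for number, line in enumerate(textwrap.wrap(text, width)):
        if passage in line:
            return number
    return None


for width in (20, 40):
    # The line number depends on the display; the offset does not.
    print(width, line_of(text, "Files", width), offset)
# 20 3 65
# 40 1 65
```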

In the case of 'reference-linking', we can refer to a passage by including information about its beginning and length, determined by character position within the text (in analogy to the _pp._ operator used for printed publications), as well as information about the version of the text (served in printed texts by the edition and date of publication). So what is common in printed texts as page information is here replaced by a character position range and a version. Such a reference-link is more precise, addressing a particular section of a particular version of a document regardless of how it is rendered on an interface.
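A reference-link of this kind needs four things: where the document is, which version is meant, where the passage starts, and how long it is. The sketch below shows one possible encoding, not an existing standard. Two choices in it are assumptions made for illustration: a truncated SHA-256 digest of the text serves as the version label, and the `#v=...&c=start,length` fragment syntax is invented for this example. `example.org` is a placeholder.

```python
import hashlib
from dataclasses import dataclass


def version_of(text: str) -> str:
    """Hypothetical version label: a short SHA-256 digest of the exact text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class ReferenceLink:
    url: str      # where the document lives
    version: str  # stands in for edition and date of publication
    start: int    # character position where the passage begins
    length: int   # number of characters, in analogy to a pp. range

    def to_url(self) -> str:
        # Hypothetical fragment syntax, chosen for this sketch only.
        return f"{self.url}#v={self.version}&c={self.start},{self.length}"

    def resolve(self, text: str) -> str:
        """Return the referred passage, refusing any other version of the text."""
        if version_of(text) != self.version:
            raise ValueError("text is not the referenced version")
        return text[self.start:self.start + self.length]


def cite(url: str, text: str, passage: str) -> ReferenceLink:
    """Build a reference-link to the first occurrence of `passage` in `text`."""
    start = text.index(passage)
    return ReferenceLink(url, version_of(text), start, len(passage))


text = "Hyperlinks are linking documents as wholes by default."
ref = cite("https://example.org/communing-texts", text, "documents as wholes")
print(ref.to_url())
print(ref.resolve(text))  # documents as wholes
```

Tying the link to a version is what keeps plain character positions trustworthy. If the text changes, `resolve` refuses rather than silently returning a different passage.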

It is a relatively simple idea and its implementation does not seem to be very hard, so I wonder why it has not been implemented already. I discussed it with several people yesterday and found out that there had indeed already been attempts in this direction. Adam Hyde pointed me to a proposal for _fuzzy anchors_ presented on the blog of the Hypothes.is initiative last year, which, in order to overcome the need for versioning, employs diff algorithms to locate the referred section; it is, however, too complicated to explain in this setting.[1] Aaaaarg has recently implemented in its PDF reader an option to generate URLs for a particular point in a scanned document. This is itself a great improvement, although it treats texts as images and is thus specific to a particular scan of a book, and the generated links are not public URLs.
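This does not reproduce the Hypothes.is proposal. It is a rough stand-in for the idea of using a diff to carry a reference across versions. The sketch uses `difflib.SequenceMatcher` from Python's standard library to map a character range in an old version onto a revised one. The helper names are hypothetical, and the approach is much cruder than real fuzzy anchoring.

```python
from difflib import SequenceMatcher


def map_offset(old: str, new: str, pos: int) -> int:
    """Carry a character position from `old` over to `new` using a diff."""
    matcher = SequenceMatcher(None, old, new, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if i1 <= pos < i2:
            # In unchanged text the position moves along with its block;
            # in changed text the best we can do is the start of the change.
            return j1 + (pos - i1) if tag == "equal" else j1
    return len(new)  # the position was at the very end of the old text


def relocate(old: str, new: str, start: int, length: int) -> str:
    """Find the passage referenced as (start, length) in `old` within `new`."""
    return new[map_offset(old, new, start):map_offset(old, new, start + length)]


old = "The quick brown fox jumps over the lazy dog."
new = "Intro. " + old                   # text inserted before the passage
edited = old.replace("jumps", "leaps")  # text changed elsewhere

print(relocate(old, new, old.index("brown fox"), 9))    # brown fox
print(relocate(old, edited, old.index("lazy dog"), 8))  # lazy dog
```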

Using character position in references requires an agreement on how to count. There are at least two options. One is to include all source code in positioning, measuring the distance from an anchor such as the beginning of the text, the beginning of the chapter, or the beginning of the paragraph. The second option is to distinguish between operators and operands, and count only operands. Here there are further options for where to draw the line between them. We can consider as operands only characters with phonetic properties (letters, numbers and symbols), stripping the text of the operators that are there to shape its sonic and visual rendering, such as whitespace, commas, periods, and HTML, markdown and other tags, so that we are left with the body of the text to count in. This would render operators unreferrable and mean counting as in _scriptio continua_.
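The two counting conventions can be compared on a small HTML fragment. This sketch makes two simplifying assumptions. First, it treats only letters and digits as operands, which is one of the possible places to draw the line described above; it drops symbols along with whitespace and punctuation. Second, it removes tags with a naive regular expression that would not cope with real-world HTML.

```python
import re

TAG = re.compile(r"<[^>]*>")  # naive tag pattern, good enough for this sketch


def operands(source: str) -> str:
    """Strip tags, whitespace and punctuation, leaving a scriptio-continua string."""
    return "".join(ch for ch in TAG.sub("", source) if ch.isalnum())


def raw_position(source: str, phrase: str) -> int:
    """Option one: count every character of the source, markup included."""
    return source.index(phrase)


def operand_position(source: str, phrase: str) -> int:
    """Option two: count operands only."""
    return operands(source).index(operands(phrase))


styled = "<p>Information wants to be <em>free</em>.</p>"
plain = "<p>Information wants to be free.</p>"

print(raw_position(styled, "free"), raw_position(plain, "free"))          # 31 27
print(operand_position(styled, "free"), operand_position(plain, "free"))  # 20 20
print(operands(styled))  # Informationwantstobefree
```

Counting only operands keeps a reference stable when only the markup changes. The cost is that the markup itself can no longer be referred to.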

_Scriptio continua_ is a very old example of the linear, one-dimensional treatment of text. Let's look again at the bookroll with Plato's writing. Even though it is 'designed' into grids, on closer look it reveals the lack of any other structural elements: there are no spaces, commas, periods or line-breaks; the text is merely one flow, one long line.

_Phaedrus_ was written in the fourth century BC (this copy comes from the second century AD). Word and paragraph separators were reintroduced much later, between the second and sixth centuries AD, when rolls were gradually transcribed into codices that were bound as pages and numbered (a dramatic change in publishing comparable to digital changes today).[2]

'Reference-linking' has not been prominent in discussions about sharing books online, and I only came to realise its significance while preparing for this event. There is a tremendous amount of very old, recent and new texts online, but we haven't done much to open them up to contextual reading. In this, publishers of all 'grounds' are in it together.

We are equipped to treat the internet not only as a repository and library but also to take into account its potential for reading, which has been hiding in front of our very eyes: to expand the notion of the hyperlink by taking into account the techniques of referencing, and to expand the notion of referencing by realising its plasticity, which has always been imagined as if it were there; to mesh texts with public URLs to enable the entanglement of referencing and hyperlinks. Here, open access gains further relevance and importance.

Dušan Barok

_Written May 21-23, 2014, in Vienna and Rotterdam. Revised May 28, 2014._

Notes

1. Proposals for paragraph-based hyperlinking can be traced back to the work of Douglas Engelbart, and today there are a number of related ideas, some of which have been implemented on a small scale: [fuzzy anchoring](http://hypothes.is/blog/fuzzy-anchoring/); [purple numbers](http://project.cim3.net/wiki/PMWX_White_Paper_2008); [robust anchors](http://github.com/hypothesis/h/wiki/robust-anchors); [_Emphasis_](http://open.blogs.nytimes.com/2011/01/11/emphasis-update-and-source); and [others](http://en.wikipedia.org/wiki/Fragment_identifier#Proposals). One of their shortcomings is their dependence on structural elements such as paragraphs, which makes them unsuitable for texts with long paragraphs (e.g. Adorno's _Aesthetic Theory_), visual poetry or computer code; another is the requirement to store anchors along with the text.

2. Works that happened not to be of interest at the time ceased to be copied and mostly disappeared. On the bookroll and its gradual replacement by the codex, see William A. Johnson, "The Ancient Book", in _The Oxford Handbook of Papyrology_, ed. Roger S. Bagnall, Oxford, 2009, pp. 256–281, [link](http://google.com/books?id=6GRcLuc124oC&pg=PA256).

Addendum (June 9)

Arie Altena wrote a [report from the panel](http://digitalpublishingtoolkit.org/2014/05/off-the-press-report-day-ii/), published on the website of the Digital Publishing Toolkit initiative, followed by another [summary of the talk](http://digitalpublishingtoolkit.org/2014/05/dusan-barok-digital-imprint-the-motion-of-publishing/) by Irina Enache.

The online repository Aaaaarg [has introduced](http://twitter.com/aaaarg/status/474717492808413184) the reference-link function in its document viewer; see [an example](http://aaaaarg.fail/ref/60090008362c07ed5a312cda7d26ecb8#0.102).