Giorgetta, Nicoletti & Adema

A Conversation on Digital Archiving Practices

2015

# A Conversation on Digital Archiving Practices

A couple of months ago Davide Giorgetta and Valerio Nicoletti (both ISIA

Urbino) did an interview with me for their MA in Design of Publishing. Silvio

Lorusso, was so kind to publish the interview on the fantastic

[p-dpa.net](http://p-dpa.net/a-conversation-on-digital-archiving-practices-

with-janneke-adema/). I am reblogging it here.

* * *

[Davide Giorgetta](http://p-dpa.net/creator/davide-giorgetta/) and [Valerio

Nicoletti](http://p-dpa.net/creator/valerio-nicoletti/) are both students from

[ISIA Urbino](http://www.isiaurbino.net/home/), where they attend the Master

Course in Design for Publishing. They are currently investigating the

independent side of digital archiving practices within the scope of the

publishing world.

As part of their research, they asked some questions to Janneke Adema, who is

Research Fellow in Digital Media at Coventry University, with a PhD in Media

(Coventry University) and a background in History (MA) and Philosophy (MA)

(both University of Groningen) and Book and Digital Media Studies (MA) (Leiden

University). Janneke’s PhD thesis focuses on the future of the scholarly book

in the humanities. She has been conducting research for the

[OAPEN](http://project.oapen.org/index.php/about-oapen) project, and

subsequently the OAPEN foundation, from 2008 until 2013 (including research

for OAPEN-NL and DOAB). Her research for OAPEN focused on user needs and

publishing models concerning Open Access books in the Humanities and Social

Sciences.

**Davide Giorgetta & Valerio Nicoletti: Does a way out from the debate between

publishers and digital independent libraries (Monoskop Log, Ubuweb,

Aaaarg.org) exist, in terms of copyright? An alternative solution able to

solve the issue and to provide equal opportunities to everyone? Would the fear

of publishers of a possible reduction of incomes be legitimized if the access

to their digital publications was open and free?**

Janneke Adema: This is an interesting question, since for many academics this

‘way out’ (at least in so far it concerns scholarly publications) has been

envisioned in or through the open access movement and the use of Creative

Commons licenses. However, the open access movement, a rather plural and

loosely defined group of people, institutions and networks, in its more

moderate instantiations tends to distance itself from piracy and copyright

infringement or copy(far)left practices. Through its use of and favoring of

Creative Commons licenses one could even argue that it has been mainly

concerned with a reform of copyright rather than a radical critique of and

rethinking of the common and the right to copy (Cramer 2013, Hall

2014).1(http://p-dpa.net/a-conversation-on-digital-archiving-practices-

with-janneke-adema/#fn:1 "see footnote") Nonetheless, in its more radical

guises open access can be more closely aligned with the practices associated

with digital pirate libraries such as the ones listed above, for instance

through Aaron Swartz’s notion of [Guerilla Open

Access](https://archive.org/stream/GuerillaOpenAccessManifesto/Goamjuly2008_djvu.txt):

> We need to take information, wherever it is stored, make our copies and

share them with the world. We need to take stuff that’s out of copyright and

add it to the archive. We need to buy secret databases and put them on the

Web. We need to download scientific journals and upload them to file sharing

networks. We need to fight for Guerilla Open Access. (Swartz 2008)

However whatever form or vision of open access you prefer, I do not think it

is a ‘solution’ to any problem—such as copyright/fight—, but I would rather

see it, as I have written

[elsewhere](http://blogs.lse.ac.uk/impactofsocialsciences/2014/11/18

/embracing-messiness-adema-pdsc14/), ‘as an ongoing processual and critical

engagement with changes in the publishing system, in our scholarly

communication practices and in our media and technologies of communication.’

And in this sense open access practices offer us the possibility to critically

reflect upon the politics of knowledge production, including copyright and

piracy, openness and the commons, indeed, even upon the nature of the book

itself.

With respect to the second part of your question, again, where it concerns

scholarly books, [research by Ronald

Snijder](https://scholar.google.com/citations?view_op=view_citation&hl=en&user=PuDczakAAAAJ&citation_for_view=PuDczakAAAAJ:u-x6o8ySG0sC)

shows no decline in sales or income for publishers once they release their

scholarly books in open access. The open availability does however lead to

more discovery and online consultation, meaning that it actually might lead to

more ‘impact’ for scholarly books (Snijder 2010).

**DG, VN: In which way, if any, are digital archiving practices stimulating

new publishing phenomenons? Are there any innovative outcomes, apart the

obvious relation to p.o.d. tools? (or interesting new projects in this

field)**

JA: Beyond extending access, I am mostly interested in how digital archiving

practices have the potential to stimulate the following practices or phenomena

(which in no way are specific to digital archiving or publishing practices, as

they have always been a potential part of print publications too): reuse and

remix; processual research and iterative publishing; and collaborative forms

of knowledge production. These practices interest me mainly as they have the

potential to critique the way the (printed) book has been commodified and

essentialised over the centuries, in a bound, linear and fixed format, a

practice which is currently being replicated in a digital context. Indeed, the

book has been fixed in this way both discursively and through a system of

material production within publishing and academia—which includes our

institutions and practices of scholarly communication—that prefers book

objects as quantifiable and auditable performance indicators and as marketable

commodities and objects of symbolic value exchange. The practices and

phenomena mentioned above, i.e. remix, versioning and collaboration, have the

potential to help us to reimagine the bound nature of the book and to explore

both a spatial and temporal critique of the book as a fixed object; they can

aid us to examine and experiment with various different incisions that can be

made in our scholarship as part of the informal and formal publishing and

communication of our research that goes beyond the final research commodity.

In this sense I am interested in how these specific digital archiving,

research and publishing practices offer us the possibility to imagine a

different, perhaps more ethical humanities, a humanities that is processual,

contingent, unbound and unfinished. How can these practices aid us in how to

cut well in the ongoing unfolding of our research, how can they help us

explore how to make potentially better interventions? How can we take

responsibility as scholars for our entangled becoming with our research and

publications? (Barad 2007, Kember and Zylinska 2012)

Examples that I find interesting in the realm of the humanities in this

respect include projects that experiment with such a critique of our fixed,

print-based practices and institutions in an affirmative way: for example Mark

Amerika’s [remixthebook](http://www.remixthebook.com/) project; Open

Humanities’ [Living Books about Life](http://www.livingbooksaboutlife.org/)

series; projects such as

[Vectors](http://vectors.usc.edu/issues/index.php?issue=7) and

[Scalar](http://scalar.usc.edu/); and collaborative knowledge production,

archiving and creation projects, from wiki-based research projects to AAAARG.

**DG, VN: In which way does a digital container influence its content? Does

the same book — if archived on different platforms, such as _Internet Archive_

, _The Pirate Bay_ , _Monoskop Log_ — still remain the same cultural item?**

JA: In short my answer to this question would be ‘no’. Books are embodied

entities, which are materially established through their specific affordances

in relationship to their production, dissemination, reception and

preservation. This means that the specific materiality of the (digital) book

is partly an outcome of these ongoing processes. Katherine Hayles has argued

in this respect that materiality is an emergent property:

> In this view of materiality, it is not merely an inert collection of

physical properties but a dynamic quality that emerges from the interplay

between the text as a physical artifact, its conceptual content, and the

interpretive activities of readers and writers. Materiality thus cannot be

specified in advance; rather, it occupies a borderland— or better, performs as

connective tissue—joining the physical and mental, the artifact and the user.

(2004: 72)

Similarly, Matthew Kirschenbaum points out that the preservation of digital

objects is:

> _logically inseparable_ from the act of their creation’ (…) ‘The lag between

creation and preservation collapses completely, since a digital object may

only ever be said to be preserved _if_ it is accessible, and each individual

access creates the object anew. One can, in a very literal sense, _never_

access the “same” electronic file twice, since each and every access

constitutes a distinct instance of the file that will be addressed and stored

in a unique location in computer memory. (Kirschenbaum 2013)

Every time we access a digital object, we thus duplicate it, we copy it and we

instantiate it. And this is exactly why, in our strategies of conservation,

every time we access a file we also (re)create these objects anew over and

over again. The agency of the archive, of the software and hardware, are also

apparent here, where archives are themselves ‘active ‘‘archaeologists’’ of

knowledge’ (Ernst 2011: 239) and, as Kirschenbaum puts it, ‘the archive writes

itself’ (2013).

In this sense a book can be seen as an apparatus, consisting of an

entanglement of relationships between, among other things, authors, books, the

outside world, readers, the material production and political economy of book

publishing, its preservation and material instantiations, and the discursive

formation of scholarship. Books as apparatuses are thus reality shaping, they

are performative. This relates to Johanna Drucker’s notion of ‘performative

materiality’, where Drucker argues for an extension of what a book _is_ (i.e.

from a focus on its specific properties and affordances), to what a book

_does_ : ‘Performative materiality suggests that what something _is_ has to be

understood in terms of what it _does_ , how it works within machinic,

systemic, and cultural domains.’ For, as Drucker argues, ‘no matter how

detailed a description of material substrates or systems we have, their use is

performative whether this is a reading by an individual, the processing of

code, the transmission of signals through a system, the viewing of a film,

performance of a play, or a musical work and so on. Material conditions

provide an inscriptional base, a score, a point of departure, a provocation,

from which a work is produced as an event’ (Drucker 2013).

So, to come back to your question, these specific digital platforms (Monoskop,

The Pirate Bay etc.) become integral aspects of the apparatus of the book and

each in their own different way participates in the performance and

instantiation of the books in their archives. Not only does a digital book

therefore differ as a material or cultural object from a printed book, a

digital object also has materially distinct properties related to the platform

on which it is made available. Indeed, building further on the theories

described above, a book is a different object every time it is instantiated or

read, be it by a human or machinic entity; they become part of the apparatus

of the book, a performative apparatus. Therefore, as Silvio Lorusso has

stated:

**DG, VN: In your opinion, can scholarly publishing, in particular self-

archiving practices, constitute a bridge covering the gap between authors and

users in terms of access to knowledge? Could we hope that these practices will

find a broader use, moving from very specific fields (academic papers) to book

publishing in general?**

JA: On the one hand, yes. Self-archiving, or the ‘green road’ to open access,

offers a way for academics to make their research available in a preprint form

via open access repositories in a relatively simple and straightforward way,

making it easily accessible to other academics and more general audiences.

However, it can be argued that as a strategy, the green road doesn’t seem to

be very subversive, where it doesn’t actively rethink, re-imagine, or

experiment with the system of scholarly knowledge production in a more

substantial way, including peer-review and the print-based publication forms

this system continues to promote. With its emphasis on achieving universal,

free, online access to research, a rigorous critical exploration of the form

of the book itself doesn’t seem to be a main priority of green open access

activists. Stevan Harnad, one of the main proponents of green open access and

self-archiving has for instance stated that ‘it’s time to stop letting the

best get in the way of the better: Let’s forget about Libre and Gold OA until

we have managed to mandate Green Gratis OA universally’ (Harnad 2012). This is

where the self-archiving strategy in its current implementation falls short I

think with respect to the ‘breaking-down’ of barriers between authors and

users, where it isn’t necessarily committed to following a libre open access

strategy, which, one could argue, would be more open to adopting and promoting

forms of open access that are designed to make material available for others

to (re) use, copy, reproduce, distribute, transmit, translate, modify, remix

and build upon? Surely this would be a more substantial strategy to bridge the

gap between authors and users with respect to the production, dissemination

and consumption of knowledge?

With respect to the second part of your question, could these practices find a

broader use? I am not sure, mainly because of the specific characteristics of

academia and scholarly publishing, where scholars are directly employed and

paid by their institutions for the research work they do. Hence, self-

archiving this work would not directly lead to any or much loss of income for

academics. In other fields, such as literary publishing for example, this

issue of remuneration can become quite urgent however, even though many [free

culture](https://en.wikipedia.org/wiki/Free_culture_movement) activists (such

as Lawrence Lessig and Cory Doctorow) have argued that freely sharing cultural

goods online, or even self-publishing, doesn’t necessarily need to lead to any

loss of income for cultural producers. So in this respect I don’t think we can

lift something like open access self-archiving out of its specific context and

apply it to other contexts all that easily, although we should certainly

experiment with this of course in different domains of digital culture.

**DG, VN: After your answers, we would also receive suggestions from you. Do

you notice any unresolved or raising questions in the contemporary context of

digital archiving practices and their relation to the publishing realm?**

JA: So many :). Just to name a few: the politics of search and filtering

related to information overload; the ethics and politics of publishing in

relationship to when, where, how and why we decide to publish our research,

for what reasons and with what underlying motivations; the continued text- and

object-based focus of our archiving and publishing practices and platforms,

where there is a lack of space to publish and develop more multimodal,

iterative, diagrammatic and speculative forms of scholarship; issues of free

labor and the problem or remuneration of intellectual labor in sharing

economies etc.

**Bibliography**

* Adema, J. (2014) ‘Embracing Messiness’. [17 November 2014] available from [17 November 2014]

* Adema, J. and Hall, G. (2013) ‘The Political Nature of the Book: On Artists’ Books and Radical Open Access’. _New Formations_ 78 (1), 138–156

* Barad, K. (2007) _Meeting the Universe Halfway: Quantum Physics and the Entanglement of Matter and Meaning_. Duke University Press

* Cramer, F. (2013) _Anti-Media: Ephemera on Speculative Arts_. Rotterdam : New York, NY: nai010 publishers

* Drucker, J. (2013) _Performative Materiality and Theoretical Approaches to Interface_. [online] 7 (1). available from [4 April 2014]

* Ernst, W. (2011) ‘Media Archaeography: Method and Machine versus History and Narrative of Media’. in _Media Archaeology: Approaches, Applications, and Implications_. ed. by Huhtamo, E. and Parikka, J. University of California Press

* Hall, G. (2014) ‘Copyfight’. in _Critical Keywords for the Digital Humanities_ , [online] Lueneburg: Centre for Digital Cultures (CDC). available from [5 December 2014]

* Harnad, S. (2012) ‘Open Access: Gratis and Libre’. [3 May 2012] available from [4 March 2014]

* Hayles, N.K. (2004) ‘Print Is Flat, Code Is Deep: The Importance of Media-Specific Analysis’. _Poetics Today_ 25 (1), 67–90

* Kember, S. and Zylinska, J. (2012) _Life After New Media: Mediation as a Vital Process_. MIT Press

* Kirschenbaum, M. (2013) ‘The .txtual Condition: Digital Humanities, Born-Digital Archives, and the Future Literary’. _DHQ: Digital Humanities Quarterly_ [online] 7 (1). available from [20 July 2014]

* Lorusso, S. (2014) _The Post-Digital Publishing Archive: An Inventory of Speculative Strategies_. in ‘The Aesthetics of the Humanities: Towards a Poetic Knowledge Production’ [online] held 11 June 2014 at Coventry University. available from [31 May 2015]

* Snijder, R. (2010) ‘The Profits of Free Books: An Experiment to Measure the Impact of Open Access Publishing’. _Learned Publishing_ 23 (4), 293–301

* Swartz, A. (2008) _Guerilla Open Access Manifesto_ [online] available from [31 May 2015]

Murtaugh

A bag but is language nothing of words

2016

In text indexing and other machine reading applications the term "bag of

words" is frequently used to underscore how processing algorithms often

represent text using a data structure (word histograms or weighted vectors)

where the original order of the words in sentence form is stripped away. While

"bag of words" might well serve as a cautionary reminder to programmers of the

essential violence perpetrated to a text and a call to critically question the

efficacy of methods based on subsequent transformations, the expression's use

seems in practice more like a badge of pride or a schoolyard taunt that would

go: Hey language: you're nothin' but a big BAG-OF-WORDS.

## Bag of words

In information retrieval and other so-called _machine-reading_ applications

(such as text indexing for web search engines) the term "bag of words" is used

to underscore how in the course of processing a text the original order of the

words in sentence form is stripped away. The resulting representation is then

a collection of each unique word used in the text, typically weighted by the

number of times the word occurs.

Bag of words, also known as word histograms or weighted term vectors, are a

standard part of the data engineer's toolkit. But why such a drastic

transformation? The utility of "bag of words" is in how it makes text amenable

to code, first in that it's very straightforward to implement the translation

from a text document to a bag of words representation. More significantly,

this transformation then opens up a wide collection of tools and techniques

for further transformation and analysis purposes. For instance, a number of

libraries available in the booming field of "data sciences" work with "high

dimension" vectors; bag of words is a way to transform a written document into

a mathematical vector where each "dimension" corresponds to the (relative)

quantity of each unique word. While physically unimaginable and abstract

(imagine each of Shakespeare's works as points in a 14 million dimensional

space), from a formal mathematical perspective, it's quite a comfortable idea,

and many complementary techniques (such as principle component analysis) exist

to reduce the resulting complexity.

What's striking about a bag of words representation, given is centrality in so

many text retrieval application is its irreversibility. Given a bag of words

representation of a text and faced with the task of producing the original

text would require in essence the "brain" of a writer to recompose sentences,

working with the patience of a devoted cryptogram puzzler to draw from the

precise stock of available words. While "bag of words" might well serve as a

cautionary reminder to programmers of the essential violence perpetrated to a

text and a call to critically question the efficacy of methods based on

subsequent transformations, the expressions use seems in practice more like a

badge of pride or a schoolyard taunt that would go: Hey language: you're

nothing but a big BAG-OF-WORDS. Following this spirit of the term, "bag of

words" celebrates a perfunctory step of "breaking" a text into a purer form

amenable to computation, to stripping language of its silly redundant

repetitions and foolishly contrived stylistic phrasings to reveal a purer

inner essence.

## Book of words

Lieber's Standard Telegraphic Code, first published in 1896 and republished in

various updated editions through the early 1900s, is an example of one of

several competing systems of telegraph code books. The idea was for both

senders and receivers of telegraph messages to use the books to translate

their messages into a sequence of code words which can then be sent for less

money as telegraph messages were paid by the word. In the front of the book, a

list of examples gives a sampling of how messages like: "Have bought for your

account 400 bales of cotton, March delivery, at 8.34" can be conveyed by a

telegram with the message "Ciotola, Delaboravi". In each case the reduction of

number of transmitted words is highlighted to underscore the efficacy of the

method. Like a dictionary or thesaurus, the book is primarily organized around

key words, such as _act_ , _advice_ , _affairs_ , _bags_ , _bail_ , and

_bales_ , under which exhaustive lists of useful phrases involving the

corresponding word are provided in the main pages of the volume. [1]

> [...] my focus in this chapter is on the inscription technology that grew

parasitically alongside the monopolistic pricing strategies of telegraph

companies: telegraph code books. Constructed under the bywords “economy,”

“secrecy,” and “simplicity,” telegraph code books matched phrases and words

with code letters or numbers. The idea was to use a single code word instead

of an entire phrase, thus saving money by serving as an information

compression technology. Generally economy won out over secrecy, but in

specialized cases, secrecy was also important.[2]

In Katherine Hayles' chapter devoted to telegraph code books she observes how:

> The interaction between code and language shows a steady movement away from

a human-centric view of code toward a machine-centric view, thus anticipating

the development of full-fledged machine codes with the digital computer. [3]

[](/wiki/index.php?title=File:Liebers_P1016851.JPG)

Aspects of this transitional moment are apparent in a notice included

prominently inserted in the Lieber's code book:

> After July, 1904, all combinations of letters that do not exceed ten will

pass as one cipher word, provided that it is pronounceable, or that it is

taken from the following languages: English, French, German, Dutch, Spanish,

Portuguese or Latin -- International Telegraphic Conference, July 1903 [4]

Conforming to international conventions regulating telegraph communication at

that time, the stipulation that code words be actual words drawn from a

variety of European languages (many of Lieber's code words are indeed

arbitrary Dutch, German, and Spanish words) underscores this particular moment

of transition as reference to the human body in the form of "pronounceable"

speech from representative languages begins to yield to the inherent potential

for arbitrariness in digital representation.

What telegraph code books do is remind us of is the relation of language in

general to economy. Whether they may be economies of memory, attention, costs

paid to a telecommunicatons company, or in terms of computer processing time

or storage space, encoding language or knowledge in any form of writing is a

form of shorthand and always involves an interplay with what one expects to

perform or "get out" of the resulting encoding.

> Along with the invention of telegraphic codes comes a paradox that John

Guillory has noted: code can be used both to clarify and occlude. Among the

sedimented structures in the technological unconscious is the dream of a

universal language. Uniting the world in networks of communication that

flashed faster than ever before, telegraphy was particularly suited to the

idea that intercultural communication could become almost effortless. In this

utopian vision, the effects of continuous reciprocal causality expand to

global proportions capable of radically transforming the conditions of human

life. That these dreams were never realized seems, in retrospect, inevitable.

[5]

Far from providing a universal system of encoding messages in the English

language, Lieber's code is quite clearly designed for the particular needs and

conditions of its use. In addition to the phrases ordered by keywords, the

book includes a number of tables of terms for specialized use. One table lists

a set of words used to describe all possible permutations of numeric grades of

coffee (Choliam = 3,4, Choliambos = 3,4,5, Choliba = 4,5, etc.); another table

lists pairs of code words to express the respective daily rise or fall of the

price of coffee at the port of Le Havre in increments of a quarter of a Franc

per 50 kilos ("Chirriado = prices have advanced 1 1/4 francs"). From an

archaeological perspective, the Lieber's code book reveals a cross section of

the needs and desires of early 20th century business communication between the

United States and its trading partners.

The advertisements lining the Liebers Code book further situate its use and

that of commercial telegraphy. Among the many advertisements for banking and

law services, office equipment, and alcohol are several ads for gun powder and

explosives, drilling equipment and metallurgic services all with specific

applications to mining. Extending telegraphy's formative role for ship-to-

shore and ship-to-ship communication for reasons of safety, commercial

telegraphy extended this network of communication to include those parties

coordinating the "raw materials" being mined, grown, or otherwise extracted

from overseas sources and shipped back for sale.

## "Raw data now!"

From [La ville intelligente - Ville de la connaissance](/wiki/index.php?title

=La_ville_intelligente_-_Ville_de_la_connaissance "La ville intelligente -

Ville de la connaissance"):

Étant donné que les nouvelles formes modernistes et l'utilisation de matériaux

propageaient l'abondance d'éléments décoratifs, Paul Otlet croyait en la

possibilité du langage comme modèle de « [données

brutes](/wiki/index.php?title=Bag_of_words "Bag of words") », le réduisant aux

informations essentielles et aux faits sans ambiguïté, tout en se débarrassant

de tous les éléments inefficaces et subjectifs.

From [The Smart City - City of Knowledge](/wiki/index.php?title

=The_Smart_City_-_City_of_Knowledge "The Smart City - City of Knowledge"):

As new modernist forms and use of materials propagated the abundance of

decorative elements, Otlet believed in the possibility of language as a model

of '[raw data](/wiki/index.php?title=Bag_of_words "Bag of words")', reducing

it to essential information and unambiguous facts, while removing all

inefficient assets of ambiguity or subjectivity.

> Tim Berners-Lee: [...] Make a beautiful website, but first give us the

unadulterated data, we want the data. We want unadulterated data. OK, we have

to ask for raw data now. And I'm going to ask you to practice that, OK? Can

you say "raw"?

>

> Audience: Raw.

>

> Tim Berners-Lee: Can you say "data"?

>

> Audience: Data.

>

> TBL: Can you say "now"?

>

> Audience: Now!

>

> TBL: Alright, "raw data now"!

>

> [...]

>

> So, we're at the stage now where we have to do this -- the people who think

it's a great idea. And all the people -- and I think there's a lot of people

at TED who do things because -- even though there's not an immediate return on

the investment because it will only really pay off when everybody else has

done it -- they'll do it because they're the sort of person who just does

things which would be good if everybody else did them. OK, so it's called

linked data. I want you to make it. I want you to demand it. [6]

## Un/Structured

As graduate students at Stanford, Sergey Brin and Lawrence (Larry) Page had an

early interest in producing "structured data" from the "unstructured" web. [7]

> The World Wide Web provides a vast source of information of almost all

types, ranging from DNA databases to resumes to lists of favorite restaurants.

However, this information is often scattered among many web servers and hosts,

using many different formats. If these chunks of information could be

extracted from the World Wide Web and integrated into a structured form, they

would form an unprecedented source of information. It would include the

largest international directory of people, the largest and most diverse

databases of products, the greatest bibliography of academic works, and many

other useful resources. [...]

>

> **2.1 The Problem**

> Here we define our problem more formally:

> Let D be a large database of unstructured information such as the World

Wide Web [...] [8]

In a paper titled _Dynamic Data Mining_ Brin and Page situate their research

looking for _rules_ (statistical correlations) between words used in web

pages. The "baskets" they mention stem from the origins of "market basket"

techniques developed to find correlations between the items recorded in the

purchase receipts of supermarket customers. In their case, they deal with web

pages rather than shopping baskets, and words instead of purchases. In

transitioning to the much larger scale of the web, they describe the

usefulness of their research in terms of its computational economy, that is

the ability to tackle the scale of the web and still perform using

contemporary computing power completing its task in a reasonably short amount

of time.

> A traditional algorithm could not compute the large itemsets in the lifetime

of the universe. [...] Yet many data sets are difficult to mine because they

have many frequently occurring items, complex relationships between the items,

and a large number of items per basket. In this paper we experiment with word

usage in documents on the World Wide Web (see Section 4.2 for details about

this data set). This data set is fundamentally different from a supermarket

data set. Each document has roughly 150 distinct words on average, as compared

to roughly 10 items for cash register transactions. We restrict ourselves to a

subset of about 24 million documents from the web. This set of documents

contains over 14 million distinct words, with tens of thousands of them

occurring above a reasonable support threshold. Very many sets of these words

are highly correlated and occur often. [9]

## Un/Ordered

In programming, I've encountered a recurring "problem" that's quite

symptomatic. It goes something like this: you (the programmer) have managed to

cobble out a lovely "content management system" (either from scratch, or using

any number of helpful frameworks) where your user can enter some "items" into

a database, for instance to store bookmarks. After this ordered items are

automatically presented in list form (say on a web page). The author: It's

great, except... could this bookmark come before that one? The problem stems

from the fact that the database ordering (a core functionality provided by any

database) somehow applies a sorting logic that's almost but not quite right. A

typical example is the sorting of names where details (where to place a name

that starts with a Norwegian "Ø" for instance), are language-specific, and

when a mixture of languages occurs, no single ordering is necessarily

"correct". The (often) exascerbated programmer might hastily add an additional

database field so that each item can also have an "order" (perhaps in the form

of a date or some other kind of (alpha)numerical "sorting" value) to be used

to correctly order the resulting list. Now the author has a means, awkward and

indirect but workable, to control the order of the presented data on the start

page. But one might well ask, why not just edit the resulting listing as a

document? Not possible! Contemporary content management systems are based on a

data flow from a "pure" source of a database, through controlling code and

templates to produce a document as a result. The document isn't the data, it's

the end result of an irreversible process. This problem, in this and many

variants, is widespread and reveals an essential backwardness that a

particular "computer scientist" mindset relating to what constitutes "data"

and in particular it's relationship to order that makes what might be a

straightforward question of editing a document into an over-engineered

database.

Recently working with Nikolaos Vogiatzis whose research explores playful and

radically subjective alternatives to the list, Vogiatzis was struck by how

from the earliest specifications of HTML (still valid today) have separate

elements (OL and UL) for "ordered" and "unordered" lists.

> The representation of the list is not defined here, but a bulleted list for

unordered lists, and a sequence of numbered paragraphs for an ordered list

would be quite appropriate. Other possibilities for interactive display

include embedded scrollable browse panels. [10]

Vogiatzis' surprise lay in the idea of a list ever being considered

"unordered" (or in opposition to the language used in the specification, for

order to ever be considered "insignificant"). Indeed in its suggested

representation, still followed by modern web browsers, the only difference

between the two visually is that UL items are preceded by a bullet symbol,

while OL items are numbered.

The idea of ordering runs deep in programming practice where essentially

different data structures are employed depending on whether order is to be

maintained. The indexes of a "hash" table, for instance (also known as an

associative array), are ordered in an unpredictable way governed by a

representation's particular implementation. This data structure, extremely

prevalent in contemporary programming practice sacrifices order to offer other

kinds of efficiency (fast text-based retrieval for instance).

## Data mining

In announcing Google's impending data center in Mons, Belgian prime minister

Di Rupo invoked the link between the history of the mining industry in the

region and the present and future interest in "data mining" as practiced by IT

companies such as Google.

Whether speaking of bales of cotton, barrels of oil, or bags of words, what

links these subjects is the way in which the notion of "raw material" obscures

the labor and power structures employed to secure them. "Raw" is always

relative: "purity" depends on processes of "refinement" that typically carry

social/ecological impact.

Stripping language of order is an act of "disembodiment", detaching it from

the acts of writing and reading. The shift from (human) reading to machine

reading involves a shift of responsibility from the individual human body to

the obscured responsibilities and seemingly inevitable forces of the

"machine", be it the machine of a market or the machine of an algorithm.

From [X = Y](/wiki/index.php?title=X_%3D_Y "X = Y"):

Still, it is reassuring to know that the products hold traces of the work,

that even with the progressive removal of human signs in automated processes,

the workers' presence never disappears completely. This presence is proof of

the materiality of information production, and becomes a sign of the economies

and paradigms of efficiency and profitability that are involved.

The computer scientists' view of textual content as "unstructured", be it in a

webpage or the OCR scanned pages of a book, reflect a negligence to the

processes and labor of writing, editing, design, layout, typesetting, and

eventually publishing, collecting and cataloging [11].

"Unstructured" to the computer scientist, means non-conformant to particular

forms of machine reading. "Structuring" then is a social process by which

particular (additional) conventions are agreed upon and employed. Computer

scientists often view text through the eyes of their particular reading

algorithm, and in the process (voluntarily) blind themselves to the work

practices which have produced and maintain these "resources".

Berners-Lee, in chastising his audience of web publishers to not only publish

online, but to release "unadulterated" data belies a lack of imagination in

considering how language is itself structured and a blindness to the need for

more than additional technical standards to connect to existing publishing

practices.

Last Revision: 2*08*2016

1. ↑ Benjamin Franklin Lieber, Lieber's Standard Telegraphic Code, 1896, New York;

2. ↑ Katherine Hayles, "Technogenesis in Action: Telegraph Code Books and the Place of the Human", How We Think: Digital Media and Contemporary Technogenesis, 2006

3. ↑ Hayles

4. ↑ Lieber's

5. ↑ Hayles

6. ↑ Tim Berners-Lee: The next web, TED Talk, February 2009

7. ↑ "Research on the Web seems to be fashionable these days and I guess I'm no exception." from Brin's [Stanford webpage](http://infolab.stanford.edu/~sergey/)

8. ↑ Extracting Patterns and Relations from the World Wide Web, Sergey Brin, Proceedings of the WebDB Workshop at EDBT 1998,

9. ↑ Dynamic Data Mining: Exploring Large Rule Spaces by Sampling; Sergey Brin and Lawrence Page, 1998; p. 2

10. ↑ Hypertext Markup Language (HTML): "Internet Draft", Tim Berners-Lee and Daniel Connolly, June 1993,

11. ↑

_A talk given on the second day of the conference_ [Off the

Press](http://digitalpublishingtoolkit.org/22-23-may-2014/program/) _held at

WORM, Rotterdam, on May 23, 2014. Also available

in[PDF](/images/2/28/Barok_2014_Communing_Texts.pdf "Barok 2014 Communing

Texts.pdf")._

I am going to talk about publishing in the humanities, including scanning

culture, and its unrealised potentials online. For this I will treat the

internet not only as a platform for storage and distribution but also as a

medium with its own specific means for reading and writing, and consider the

relevance of plain text and its various rendering formats, such as HTML, XML,

markdown, wikitext and TeX.

One of the main reasons why books today are downloaded and bookmarked but

hardly read is the fact that they may contain something relevant but they

begin at the beginning and end at the end; or at least we are used to treat

them in this way. E-book readers and browsers are equipped with fulltext

search functionality but the search for "how does the internet change the way

we read" doesn't yield anything interesting but the diversion of attention.

Whilst there are dozens of books written on this issue. When being insistent,

one easily ends up with a folder with dozens of other books, stucked with how

to read them. There is a plethora of books online, yet there are indeed mostly

machines reading them.

It is surely tempting to celebrate or to despise the age of artificial

intelligence, flat ontology and narrowing down the differences between humans

and machines, and to write books as if only for machines or return to the

analogue, but we may as well look back and reconsider the beauty of simple

linear reading of the age of print, not for nostalgia but for what we can

learn from it.

This perspective implies treating texts in their context, and particularly in

the way they commute, how they are brought in relations with one another, into

a community, by the mere act of writing, through a technique that have

developed over time into what we have came to call _referencing_. While in the

early days referring to texts was practised simply as verbal description of a

referred writing, over millenia it evolved into a technique with standardised

practices and styles, and accordingly: it gained _precision_. This precision

is however nothing machinic, since referring to particular passages in other

texts instead of texts as wholes is an act of comradeship because it spares

the reader time when locating the passage. It also makes apparent that it is

through contexts that the web of printed books has been woven. But even though

referencing in its precision has been meant to be very concrete, particularly

the advent of the web made apparent that it is instead _virtual_. And for the

reader, laborous to follow. The web has shown and taught us that a reference

from one document to another can be plastic. To follow a reference from a

printed book the reader has to stand up, walk down the street to a library,

pick up the referred volume, flip through its pages until the referred one is

found and then follow the text until the passage most probably implied in the

text is identified, while on the web the reader, _ideally_ , merely moves her

finger a few milimeters. To click or tap; the difference between the long way

and the short way is obviously the hyperlink. Of course, in the absence of the

short way, even scholars are used to follow the reference the long way only as

an exception: there was established an unwritten rule to write for readers who

are familiar with literature in the respective field (what in turn reproduces

disciplinarity of the reader and writer), while in the case of unfamiliarity

with referred passage the reader inducts its content by interpreting its

interpretation of the writer. The beauty of reading across references was

never fully realised. But now our question is, can we be so certain that this

practice is still necessary today?

The web silently brought about a way to _implement_ the plasticity of this

pointing although it has not been realised as the legacy of referencing as we

know it from print. Today, when linking a text and having a particular passage

in mind, and even describing it in detail, the majority of links physically

point merely to the beginning of the text. Hyperlinks are linking documents as

wholes by default and the use of anchors in texts has been hardly thought of

as a _requirement_ to enable precise linking.

If we look at popular online journalism and its use of hyperlinks within the

text body we may claim that rarely someone can afford to read all those linked

articles, not even talking about hundreds of pages long reports and the like

and if something is wrong, it would get corrected via comments anyway. On the

internet, the writer is meant to be in more immediate feedback with the

reader. But not always readers are keen to comment and not always they are

allowed to. We may be easily driven to forget that quoting half of the

sentence is never quoting a full sentence, and if there ought to be the entire

quote, its source text in its whole length would need to be quoted. Think of

the quote _information wants to be free_ , which is rarely quoted with its

wider context taken into account. Even factoids, numbers, can be carbon-quoted

but if taken out of the context their meaning can be shaped significantly. The

reason for aversion to follow a reference may well be that we are usually

pointed to begin reading another text from its beginning.

While this is exactly where the practices of linking as on the web and

referencing as in scholarly work may benefit from one another. The question is

_how_ to bring them closer together.

An approach I am going to propose requires a conceptual leap to something we

have not been taught.

For centuries, the primary format of the text has been the page, a vessel, a

medium, a frame containing text embedded between straight, less or more

explicit, horizontal and vertical borders. Even before the material of the

page such as papyrus and paper appeared, the text was already contained in

lines and columns, a structure which we have learnt to perceive as a grid. The

idea of the grid allows us to view text as being structured in lines and

pages, that are in turn in hand if something is to be referred to. Pages are

counted as the distance from the beginning of the book, and lines as the

distance from the beginning of the page. It is not surprising because it is in

accord with inherent quality of its material medium -- a sheet of paper has a

shape which in turn shapes a body of a text. This tradition goes as far as to

the Ancient times and the bookroll in which we indeed find textual grids.

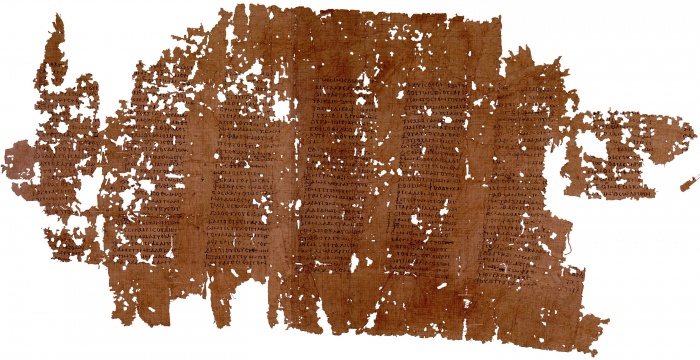

[](/File:Papyrus_of_Plato_Phaedrus.jpg)

A crucial difference between print and digital is that text files such as HTML

documents nor markdown documents nor database-driven texts did inherit this

quality. Their containers are simply not structured into pages, precisely

because of the nature of their materiality as media. Files are written on

memory drives in scattered chunks, beginning at point A and ending at point B

of a drive, continuing from C until D, and so on. Where does each of these

chunks start is ultimately independent from what it contains.

Forensic archaeologists would confirm that when a portion of a text survives,

in the case of ASCII documents it is not a page here and page there, or the

first half of the book, but textual blocks from completely arbitrary places of

the document.

This may sound unrelated to how we, humans, structure our writing in HTML

documents, emails, Office documents, even computer code, but it is a reminder

that we structure them for habitual (interfaces are rectangular) and cultural

(human-readability) reasons rather then for a technical necessity that would

stem from material properties of the medium. This distinction is apparent for

example in HTML, XML, wikitext and TeX documents with their content being both

stored on the physical drive and treated when rendered for reading interfaces

as single flow of text, and the same goes for other texts when treated with

automatic line-break setting turned off. Because line-breaks and spaces and

everything else is merely a number corresponding to a symbol in character set.

So how to address a section in this kind of document? An option offers itself

-- how computers do, or rather how we made them do it -- as a position of the

beginning of the section in the array, in one long line. It would mean to

treat the text document not in its grid-like format but as line, which merely

adapts to properties of its display when rendered. As it is nicely implied in

the animated logo of this event and as we know it from EPUBs for example.

In the case of 'reference-linking' we can refer to a passage by including the

information about its beginning and length determined by the character

position within the text (in analogy to _pp._ operator used for printed

publications) as well as the text version information (in printed texts served

by edition and date of publication). So what is common in printed text as the

page information is here replaced by the character position range and version.

Such a reference-link is more precise while addressing particular section of a

particular version of a document regardless of how it is rendered on an

interface.

It is a relatively simple idea and its implementation does not be seem to be

very hard, although I wonder why it has not been implemented already. I

discussed it with several people yesterday to find out there were indeed

already attempts in this direction. Adam Hyde pointed me to a proposal for

_fuzzy anchors_ presented on the blog of the Hypothes.is initiative last year,

which in order to overcome the need for versioning employs diff algorithms to

locate the referred section, although it is too complicated to be explained in

this setting.[1] Aaaarg has recently implemented in its PDF reader an option

to generate URLs for a particular point in the scanned document which itself

is a great improvement although it treats texts as images, thus being specific

to a particular scan of a book, and generated links are not public URLs.

Using the character position in references requires an agreement on how to

count. There are at least two options. One is to include all source code in

positioning, which means measuring the distance from the anchor such as the

beginning of the text, the beginning of the chapter, or the beginning of the

paragraph. The second option is to make a distinction between operators and

operands, and count only in operands. Here there are further options where to

make the line between them. We can consider as operands only characters with

phonetic properties -- letters, numbers and symbols, stripping the text from

operators that are there to shape sonic and visual rendering of the text such

as whitespaces, commas, periods, HTML and markdown and other tags so that we

are left with the body of the text to count in. This would mean to render

operators unreferrable and count as in _scriptio continua_.

_Scriptio continua_ is a very old example of the linear onedimensional

treatment of the text. Let's look again at the bookroll with Plato's writing.

Even though it is 'designed' into grids on a closer look it reveals the lack

of any other structural elements -- there are no spaces, commas, periods or

line-breaks, the text is merely one flow, one long line.

_Phaedrus_ was written in the fourth century BC (this copy comes from the

second century AD). Word and paragraph separators were reintroduced much

later, between the second and sixth century AD when rolls were gradually

transcribed into codices that were bound as pages and numbered (a dramatic

change in publishing comparable to digital changes today).[2]

'Reference-linking' has not been prominent in discussions about sharing books

online and I only came to realise its significance during my preparations for

this event. There is a tremendous amount of very old, recent and new texts

online but we haven't done much in opening them up to contextual reading. In

this there are publishers of all 'grounds' together.

We are equipped to treat the internet not only as repository and library but

to take into account its potentials of reading that have been hiding in front

of our very eyes. To expand the notion of hyperlink by taking into account

techniques of referencing and to expand the notion of referencing by realising

its plasticity which has always been imagined as if it is there. To mesh texts

with public URLs to enable entaglement of referencing and hyperlinks. Here,

open access gains its further relevance and importance.

Dušan Barok

_Written May 21-23, 2014, in Vienna and Rotterdam. Revised May 28, 2014._

Notes

1. ↑ Proposals for paragraph-based hyperlinking can be traced back to the work of Douglas Engelbart, and today there is a number of related ideas, some of which were implemented on a small scale: fuzzy anchoring, 1(http://hypothes.is/blog/fuzzy-anchoring/); purple numbers, 2(http://project.cim3.net/wiki/PMWX_White_Paper_2008); robust anchors, 3(http://github.com/hypothesis/h/wiki/robust-anchors); _Emphasis_ , 4(http://open.blogs.nytimes.com/2011/01/11/emphasis-update-and-source); and others 5(http://en.wikipedia.org/wiki/Fragment_identifier#Proposals). The dependence on structural elements such as paragraphs is one of their shortcoming making them not suitable for texts with longer paragraphs (e.g. Adorno's _Aesthetic Theory_ ), visual poetry or computer code; another is the requirement to store anchors along the text.

2. ↑ Works which happened not to be of interest at the time ceased to be copied and mostly disappeared. On the book roll and its gradual replacement by the codex see William A. Johnson, "The Ancient Book", in _The Oxford Handbook of Papyrology_ , ed. Roger S. Bagnall, Oxford, 2009, pp 256-281, 6(http://google.com/books?id=6GRcLuc124oC&pg=PA256).

Addendum (June 9)

Arie Altena wrote a [report from the

panel](http://digitalpublishingtoolkit.org/2014/05/off-the-press-report-day-

ii/) published on the website of Digital Publishing Toolkit initiative,

followed by another [summary of the

talk](http://digitalpublishingtoolkit.org/2014/05/dusan-barok-digital-imprint-

the-motion-of-publishing/) by Irina Enache.

The online repository Aaaaarg [has

introduced](http://twitter.com/aaaarg/status/474717492808413184) the

reference-link function in its document viewer, see [an

example](http://aaaaarg.fail/ref/60090008362c07ed5a312cda7d26ecb8#0.102).